
Getting Started with Standalone OpenStack Ironic: A Detailed Guide (Part 2)

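Would you like to manage physical servers in your cloud environment the same way you manage virtual machines? Would you like to apply Infrastructure as Code (IaC) and CI/CD pipelines to physical nodes? Through a few practical, simple and reproducible examples, let's lift some of the mystery surrounding private cloud infrastructure and bare metal lifecycle management, starting from a minimal "standalone" deployment of OpenStack Ironic.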

In our first article, we started exploring bare metal lifecycle management by introducing OpenStack Ironic in a "standalone" configuration.

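We laid the groundwork for the lab environment, describing the minimal setup of the bare metal service (bms) server, including the installation of Podman and the configuration of OpenStack Kolla Ansible. We covered the steps needed to prepare the environment, install the Ironic Python Agent (IPA) and perform the initial OpenStack deployment.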

03 Bare Metal Management

- Configuring the BMCs of the servers to be managed;

- Onboarding;

- Inspection;

- Cleaning (optional);

- Deployment;

- Rescuing unreachable nodes.

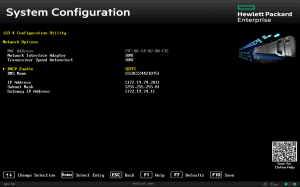

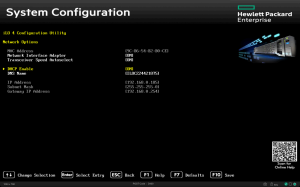

3.1 BMC Configuration

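For Ironic to manage the physical nodes, each BMC (Baseboard Management Controller) must be reachable from the bms server over the OOB/IB network 172.19.74.0/24 (for this lab, a single LAN, 192.168.0.0/24, carrying all the services is also acceptable), and the corresponding access credentials must be provided.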
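Before enrolling anything, it can be useful to check that every BMC address actually falls inside the OOB subnet. The snippet below is a minimal sketch in plain Bash (IPv4 only, no external tools); `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and the addresses are the ones used in this lab.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helpers): check whether IPv4 addresses fall
# inside a CIDR block, using only Bash arithmetic.

# ip_to_int A.B.C.D -> prints the address as a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d o
  read -r a b c d <<<"$1"
  for o in "$a" "$b" "$c" "$d"; do
    # Each octet must be 1-3 decimal digits and at most 255.
    [[ $o =~ ^[0-9]{1,3}$ ]] && (( 10#$o <= 255 )) || return 1
  done
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# ip_in_cidr IP NET/LEN -> status 0 if inside, 1 if outside, 2 on bad input.
ip_in_cidr() {
  local ip_i net_i len mask
  [[ $2 == */* ]] || return 2
  len=${2#*/}
  [[ $len =~ ^[0-9]{1,2}$ ]] && (( 10#$len <= 32 )) || return 2
  ip_i=$(ip_to_int "$1") || return 2
  net_i=$(ip_to_int "${2%/*}") || return 2
  # Netmask with LEN leading one-bits (Bash integers are 64-bit).
  mask=$(( (0xFFFFFFFF << (32 - 10#$len)) & 0xFFFFFFFF ))
  (( (ip_i & mask) == (net_i & mask) ))
}

# Example: check this lab's BMC addresses against the OOB subnet.
for bmc in 172.19.74.201 172.19.74.202; do
  if ip_in_cidr "$bmc" 172.19.74.0/24; then
    echo "$bmc: inside 172.19.74.0/24"
  else
    echo "$bmc: NOT in the OOB subnet"
  fi
done
```

The same check can be pointed at 192.168.0.0/24 when the single-LAN variant mentioned above is used.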

The Ethernet NIC dedicated to operating system installation and in-band management must have PXE boot enabled in the BIOS.

On the servers, the BMC MAC address and access credentials are printed on a dedicated label.

Let's collect the information about the servers to be managed in one convenient place:

To check whether the BMCs are reachable via the IPMI and/or Redfish protocols, let's install the corresponding command-line tools:

```
bms:~$ sudo apt install ipmitool redfishtool
...
kolla@bms:~$ redfishtool -r 172.19.74.201 -u ironic -p baremetal Systems
{
    "@odata.context": "/redfish/v1/$metadata#Systems",
    "@odata.id": "/redfish/v1/Systems/",
    "@odata.type": "#ComputerSystemCollection.ComputerSystemCollection",
    "Description": "Computer Systems view",
    "Members": [
        {
            "@odata.id": "/redfish/v1/Systems/1/"
        }
    ],
    "Members@odata.count": 1,
    "Name": "Computer Systems"
}
kolla@bms:~$ ipmitool -C 3 -I lanplus -H 172.19.74.202 \
    -U ironic -P baremetal chassis status
System Power         : off
Power Overload       : false
Power Interlock      : inactive
Main Power Fault     : false
Power Control Fault  : false
Power Restore Policy : previous
Last Power Event     :
Chassis Intrusion    : inactive
Front-Panel Lockout  : inactive
Drive Fault          : false
Cooling/Fan Fault    : false
Front Panel Control  : none
```

Note: on some BMCs, access via the IPMI and Redfish protocols may have to be explicitly enabled in the BIOS settings.

3.2 Onboarding (Enrollment)

The enrollment phase takes place when a new server is brought on board and put under Ironic's management with the `openstack baremetal node create --driver …` command.

The information needed to proceed is:

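- BMC type (e.g. ipmi, redfish, ilo, idrac, etc.);
- BMC IP address and the corresponding credentials (out-of-band);
- MAC address of the NIC that will be used for inspection, installation and, if needed, rescue (in-band).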
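With more than a couple of servers, it helps to keep the BMC data in one place and derive the enrollment commands from it. The sketch below assumes a hypothetical whitespace-separated inventory (name, driver, BMC address, user, password) and only *prints* `openstack baremetal node create` commands, using the same `--driver-info` keys as the transcript that follows; `enroll_cmd` is a hypothetical name. Review the printed commands before running them.

```bash
#!/usr/bin/env bash
# Minimal sketch: print (do not run) enrollment commands from a small
# inventory. Function name and inventory format are hypothetical.

# enroll_cmd NAME DRIVER ADDRESS USER PASSWORD
enroll_cmd() {
  local name=$1 driver=$2 addr=$3 user=$4 pass=$5
  case $driver in
    redfish)
      # Redfish expects a URL; verify_ca=False mirrors the transcript below
      # (certificate verification disabled).
      printf 'openstack baremetal node create --name %q --driver redfish' "$name"
      printf ' --driver-info redfish_address=https://%q' "$addr"
      printf ' --driver-info redfish_verify_ca=False'
      printf ' --driver-info redfish_username=%q' "$user"
      printf ' --driver-info redfish_password=%q' "$pass"
      ;;
    ipmi)
      printf 'openstack baremetal node create --name %q --driver ipmi' "$name"
      printf ' --driver-info ipmi_address=%q' "$addr"
      printf ' --driver-info ipmi_username=%q' "$user"
      printf ' --driver-info ipmi_password=%q' "$pass"
      ;;
    *)
      echo "enroll_cmd: unsupported driver '$driver'" >&2
      return 1
      ;;
  esac
  printf ' -f value -c uuid\n'
}

# Inventory: one server per line, whitespace-separated, '#' for comments.
# Values must not contain whitespace.
while read -r name driver addr user pass; do
  [[ -z $name || $name == \#* ]] && continue
  enroll_cmd "$name" "$driver" "$addr" "$user" "$pass"
done <<'EOF'
# name    driver   bmc_address    user    password
server01  redfish  172.19.74.201  ironic  baremetal
server02  ipmi     172.19.74.202  ironic  baremetal
EOF
```

Printing instead of executing keeps the script safe to re-run; note that the printed lines contain the BMC passwords in clear text.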

```
kolla@bms:~$ openstack baremetal node create \
    --name server01 --driver redfish \
    --driver-info redfish_address=https://172.19.74.201 \
    --driver-info redfish_verify_ca=False \
    --driver-info redfish_username=ironic \
    --driver-info redfish_password=baremetal \
    -f value -c uuid
5113ab44-faf2-4f76-bb41-ea8b14b7aa92
kolla@bms:~$ openstack baremetal node create \
    --name server02 --driver ipmi \
    --driver-info ipmi_address=172.19.74.202 \
    --driver-info ipmi_username=ironic \
    --driver-info ipmi_password=baremetal \
    -f value -c uuid
5294ec18-0dae-449e-913e-24a0638af8e5
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | None        | enroll             | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | None        | enroll             | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
```

To manage these servers, they must be declared `manageable` with the `openstack baremetal node manage …` command:

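Ironic queries the BMC through the specified hardware driver (redfish in this case), moves the node from `enroll` to `verifying` and finally to `manageable`, and fills in some server properties, which can be queried with `openstack baremetal node show <UUID|NAME> -f value -c properties`.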
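The transition is asynchronous: the transcript that follows needs two `node list` calls to see `verifying` turn into `manageable`. When scripting, one can instead poll the node's `provision_state`. The sketch below is generic (it takes the query command as arguments, so it can be exercised with a stub); `wait_for_state` is a hypothetical name, and treating any `*failed` or `error` state as terminal is an assumption based on Ironic's state names. Several CLI verbs also accept `--wait`, as used later for inspection.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): run CMD repeatedly until it prints
# TARGET, prints a failure state, or TIMEOUT seconds have elapsed.
#   wait_for_state TARGET TIMEOUT INTERVAL CMD [ARGS...]
# Exit status: 0 = reached, 1 = failure state or timeout, 2 = CMD failed.
wait_for_state() {
  local target=$1 timeout=$2 interval=$3 state start=$SECONDS
  shift 3
  while :; do
    state=$("$@") || return 2
    if [[ $state == "$target" ]]; then
      echo "$state"
      return 0
    fi
    case $state in
      *failed|error)   # e.g. "inspect failed", "deploy failed"
        echo "node entered '$state'" >&2
        return 1 ;;
    esac
    if (( SECONDS - start >= timeout )); then
      echo "timed out waiting for '$target' (last: '$state')" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Usage (not executed here):
#   wait_for_state manageable 600 10 \
#     openstack baremetal node show server01 -f value -c provision_state
```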

```
kolla@bms:~$ openstack baremetal node manage server01
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power off   | verifying          | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | None        | enroll             | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power off   | manageable         | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | None        | enroll             | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
kolla@bms:~$ openstack baremetal node show server01 -f value -c properties
{'vendor': 'HPE'}
```

Note: unfortunately, in this case the "canonical" name (e.g. server01) cannot be used; the corresponding UUID must be used explicitly.

3.3 Inspection

In OpenStack Ironic, the "inspection" process automatically examines the hardware characteristics of a bare metal server.

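It lets us collect useful information before the actual installation, such as disks, CPU type, RAM and network cards. This data can then be used to guide and customize the installation itself.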
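A typical use of this data is choosing the installation disk through a *root device hint* (the `root_device` property set further down), which is a small JSON object. The sketch below builds a single-key hint and rejects unknown keys; `root_device_hint` is a hypothetical name, and the accepted keys (`model`, `vendor`, `serial`, `size` in GiB, `wwn`, `wwn_with_extension`, `wwn_vendor_extension`, `rotational`, `name`, `hctl`, `by_path`) reflect the Ironic root device hints documentation as far as I know, so check them against your release.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): build a one-key Ironic root device
# hint as JSON, for use with: openstack baremetal node set NODE --property root_device=...
root_device_hint() {
  local key=$1 value=$2
  [[ -n $value ]] || { echo "empty value" >&2; return 1; }
  case $key in
    size)
      # The size hint is an integer number of GiB.
      [[ $value =~ ^[0-9]+$ ]] || { echo "size must be an integer (GiB)" >&2; return 1; }
      printf '{"%s": %s}\n' "$key" "$value"
      ;;
    rotational)
      [[ $value == true || $value == false ]] || { echo "rotational must be true or false" >&2; return 1; }
      printf '{"%s": %s}\n' "$key" "$value"
      ;;
    model|vendor|serial|wwn|wwn_with_extension|wwn_vendor_extension|name|hctl|by_path)
      # Keep the sketch simple: refuse characters that would need JSON escaping.
      if [[ $value == *'"'* || $value == *'\'* ]]; then
        echo "unsupported character in value" >&2
        return 1
      fi
      printf '{"%s": "%s"}\n' "$key" "$value"
      ;;
    *)
      echo "unknown root device hint: $key" >&2
      return 1
      ;;
  esac
}

# Usage (not executed here):
#   openstack baremetal node set server01 \
#     --property root_device="$(root_device_hint serial 4C530001220528100484)"
```

Matching on the serial number, as done below, is more robust than `name` (e.g. `/dev/sdc`), because kernel device names can change between boots.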

```
kolla@bms:~$ time openstack baremetal node inspect server01 --wait
Waiting for provision state manageable on node(s) server01

real    6m26.435s
user    0m1.213s
sys     0m0.073s
```

The resulting node inventory can be examined with `openstack baremetal node inventory save <UUID|NAME>`, which returns a JSON document. To make it easier to read, piping it through `jq` or `yq` is recommended:

```
kolla@bms:~$ openstack baremetal node inventory save server01 | \
    yq -r 'keys[]'
inventory
plugin_data
kolla@bms:~$ openstack baremetal node inventory save server01 | \
    yq -r '.inventory|keys[]'
bmc_address
bmc_mac
bmc_v6address
boot
cpu
disks
hostname
interfaces
memory
system_vendor
kolla@bms:~$ openstack baremetal node inventory save server01 | \
    yq -y '.inventory.system_vendor'
product_name: ProLiant ML350 Gen9 (776971-035)
serial_number: CZ24421875
manufacturer: HP
firmware:
  vendor: HP
  version: P92
  build_date: 08/29/2024
kolla@bms:~$ openstack baremetal node inventory save server01 | \
    yq -y '.inventory.boot'
current_boot_mode: uefi
pxe_interface: 9c:b6:54:b2:b0:ca
```

To list the NICs and their characteristics, filter the output with `yq -y '.inventory.interfaces'`, or keep only the NICs with an active link (`has_carrier==true`):

```
kolla:~$ openstack baremetal node inventory save server01 | \
    yq -y '.inventory.interfaces|map(select(.has_carrier==true))'
- name: eno1
  mac_address: 9c:b6:54:b2:b0:ca
  ipv4_address: 172.19.74.193
  ipv6_address: fe80::9eb6:54ff:feb2:b0ca%eno1
  has_carrier: true
  lldp: null
  vendor: '0x14e4'
  product: '0x1657'
  client_id: null
  biosdevname: null
  speed_mbps: 1000
  pci_address: '0000:02:00.0'
  driver: tg3
- name: eno2
  mac_address: 9c:b6:54:b2:b0:cb
  ipv4_address: 192.168.0.195
  ipv6_address: fe80::9eb6:54ff:feb2:b0cb%eno2
  has_carrier: true
  lldp: null
  vendor: '0x14e4'
  product: '0x1657'
  client_id: null
  biosdevname: null
  speed_mbps: 1000
  pci_address: '0000:02:00.1'
  driver: tg3
```

With this information you can pick the best candidate disk for the operating system. To select `/dev/sdc`, i.e. the roughly 30 GB SanDisk Ultra Fit with serial number 4C530001220528100484, set the `root_device` property:

```
kolla@bms:~$ openstack baremetal node set server01 \
    --property root_device='{"serial": "4C530001220528100484"}'
kolla@bms:~$ openstack baremetal node show server01 -f json | \
    yq -y '.properties'
vendor: HPE
cpu_arch: x86_64
root_device:
  serial: 4C530001220528100484
```

3.4 Cleaning (optional)

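If the hardware is not new, its disks may contain sensitive data or, worse, conflict with the new deployment (for example, an operating system previously installed on a disk other than the target one).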

To prevent this, it is useful to run the node cleaning that Ironic performs through the agent, with `openstack baremetal node clean <UUID>`:

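```
kolla@bms:~$ openstack baremetal node clean server01 \
    --clean-steps '[{"interface": "deploy", "step": "erase_devices_metadata"}]'
```

The mandatory `--clean-steps` option lists the operations to run, each bound to one of the following interfaces: power, management, deploy, firmware, bios and raid. For the `deploy` interface, handled by the Ironic Python Agent (IPA), the available steps are: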

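- `erase_devices`: makes sure the data on the disks is erased;
- `erase_devices_metadata`: erases only the disk metadata;
- `erase_devices_express`: hardware-assisted data erasure, currently supported only on NVMe.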

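Note: for all the other cleaning options we skip here (e.g. firmware upgrades, BIOS reset, RAID setup, etc.), refer to the node cleaning chapter of the Ironic documentation.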
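The `--clean-steps` argument is a JSON list of `{"interface": ..., "step": ...}` objects, and hand-quoting it is error-prone. The sketch below (hypothetical name `deploy_clean_steps`) assembles the list from step names; it only knows the three `deploy` steps listed above and does not handle step arguments or other interfaces.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): build the --clean-steps JSON list
# for the IPA "deploy" interface steps described above.
deploy_clean_steps() {
  local out="" step
  (( $# > 0 )) || { echo "at least one step is required" >&2; return 1; }
  for step in "$@"; do
    case $step in
      erase_devices|erase_devices_metadata|erase_devices_express) ;;
      *) echo "unknown deploy clean step: $step" >&2; return 1 ;;
    esac
    # Append one {"interface": "deploy", "step": ...} object, comma-separated.
    out+="${out:+, }{\"interface\": \"deploy\", \"step\": \"$step\"}"
  done
  printf '[%s]\n' "$out"
}

# Usage (not executed here):
#   openstack baremetal node clean server01 \
#     --clean-steps "$(deploy_clean_steps erase_devices_metadata)"
```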

3.5 Deployment

Now that we have added, analyzed and configured the systems to be managed (`openstack baremetal node [create|manage|inspect|clean]`), we have a list of nodes in the `manageable` state:

```
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power off   | manageable         | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
```

To make a physical node available for deployment, it must be declared `available`:

Any node in the `manageable` state can be made `available` with `openstack baremetal node provide <UUID|NAME>`. The reverse operation is done with `openstack baremetal node manage <UUID|NAME>`.
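Taken together, the verbs used in this article form a small state machine. The sketch below encodes, as a lookup table, which `openstack baremetal node` verbs bring a node back to `available` from the states seen in this article (`undeploy` and `unrescue` appear in later sections). The function name is hypothetical, and states not covered here (such as the various `... failed` states) are deliberately rejected.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): the `openstack baremetal node` verbs,
# in order, that bring a node back to "available" from the provision states
# used in this article. Other states are rejected on purpose.
path_to_available() {
  case $1 in
    enroll)     echo "manage provide" ;;     # enroll -> manageable -> available
    manageable) echo "provide" ;;
    available)  echo "" ;;                   # nothing to do
    active)     echo "undeploy" ;;           # active -> deleting -> available
    rescue)     echo "unrescue undeploy" ;;  # rescue -> active -> available
    *)          echo "no mapping for state '$1'" >&2; return 1 ;;
  esac
}

# Usage (not executed here; assumes each verb accepts --wait, as inspect,
# deploy and rescue do in this article):
#   state=$(openstack baremetal node show server02 -f value -c provision_state)
#   for verb in $(path_to_available "$state"); do
#     openstack baremetal node "$verb" server02 --wait
#   done
```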

```
kolla@bms:~$ openstack baremetal node provide server01
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power off   | available          | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
kolla@bms:~$ openstack baremetal node validate server01
+------------+--------+------------------------------------------------------------------------------------------------+
| Interface  | Result | Reason                                                                                         |
+------------+--------+------------------------------------------------------------------------------------------------+
| bios       | False  | Driver redfish does not support bios (disabled or not implemented).                            |
| boot       | True   |                                                                                                |
| console    | False  | Driver redfish does not support console (disabled or not implemented).                         |
| deploy     | False  | Node 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 failed to validate deploy image info. Some           |
|            |        | parameters were missing. Missing are: ['instance_info.image_source']                           |
| firmware   | False  | Driver redfish does not support firmware (disabled or not implemented).                        |
| inspect    | True   |                                                                                                |
| management | True   |                                                                                                |
| network    | True   |                                                                                                |
| power      | True   |                                                                                                |
| raid       | True   |                                                                                                |
| rescue     | False  | Node 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 is missing 'instance_info/rescue_password'. It is    |
|            |        | required for rescuing node.                                                                    |
| storage    | True   |                                                                                                |
+------------+--------+------------------------------------------------------------------------------------------------+
```

The `openstack baremetal node validate <UUID|NAME>` command performs a final check on the node to be installed (output above). The result shows that some areas are not handled (value `False`):

- `bios`: in this case the feature was explicitly disabled via `enabled_bios_interfaces = no-bios`;
- `console`: this feature is disabled as well (`enabled_console_interfaces = no-console`);

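- `deploy`: the `image_source` parameter, which specifies where to fetch the operating system image to install, is missing;

- `firmware`: the feature is disabled (`enabled_firmware_interfaces = no-firmware`);

- `rescue`: the `rescue_password` parameter is missing.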
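When validation is scripted (for instance in a CI pipeline), not every `False` is a problem: here `bios`, `console` and `firmware` are disabled on purpose, and `rescue` only matters when rescuing. The sketch below separates blocking from optional failures, reading the output of the standard OpenStack client options `-f value -c Interface -c Result`. Which interfaces count as mandatory (`boot`, `deploy`, `management`, `power`) is an assumption of this sketch, not an Ironic rule; `check_validate` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Minimal sketch: triage `openstack baremetal node validate` output.
# Reads "<interface> <True|False>" lines on stdin, e.g. from
#   openstack baremetal node validate server01 -f value -c Interface -c Result
# Prints one line per failed interface; returns 1 if any failure concerns an
# interface listed in MANDATORY_IFACES (an assumption, adjust as needed).
MANDATORY_IFACES="boot deploy management power"

check_validate() {
  local iface result rc=0
  while read -r iface result; do
    [[ -n $iface && $result == False ]] || continue
    if [[ " $MANDATORY_IFACES " == *" $iface "* ]]; then
      echo "blocking $iface"
      rc=1
    else
      echo "optional $iface"
    fi
  done
  return "$rc"
}
```

With the table above, this would report `deploy` as blocking (no `image_source` yet) and the other failures as optional.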

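Without a surrounding cloud, Ironic must be told where to fetch the image to install, either as a file or as a URL. This is exactly what the `image_source` parameter in the `--instance-info` section of the `openstack baremetal node set` command is for.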

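Although a URL on the Internet can be used, a local repository is better for saving bandwidth. Here we use the web server of the `ironic_http` container, together with the persistent `ironic` volume mounted in it: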

```
kolla:~$ sudo podman volume inspect ironic
[
    {
        "Name": "ironic",
        "Driver": "local",
        "Mountpoint": "/var/lib/containers/storage/volumes/ironic/_data",
        "CreatedAt": "2025-04-02T18:28:15.831223385+02:00",
        "Labels": {},
        "Scope": "local",
        "Options": {},
        "MountCount": 0,
        "NeedsCopyUp": true
    }
]
kolla:~$ sudo podman container inspect ironic_http | \
    yq '.[]|.Mounts[]|select(.Name=="ironic")'
{
  "Type": "volume",
  "Name": "ironic",
  "Source": "/var/lib/containers/storage/volumes/ironic/_data",
  "Destination": "/var/lib/ironic",
  "Driver": "local",
  "Mode": "",
  "Options": [
    "nosuid",
    "nodev",
    "rbind"
  ],
  "RW": true,
  "Propagation": "rprivate"
}
kolla:~$ sudo cat /etc/kolla/ironic-http/httpd.conf
Listen 172.19.74.1:8089
TraceEnable off
<VirtualHost *:8089>
    LogLevel warn
    ErrorLog "/var/log/kolla/ironic/ironic-http-error.log"
    LogFormat "%h %l %u %t \"%r\" %>s %b %D \"%{Referer}i\" \"%{User-Agent}i\"" logformat
    CustomLog "/var/log/kolla/ironic/ironic-http-access.log" logformat
    DocumentRoot "/var/lib/ironic/httpboot"
    <Directory /var/lib/ironic/httpboot>
        Options FollowSymLinks
        AllowOverride None
        Require all granted
    </Directory>
</VirtualHost>
```

Since the volume `/var/lib/containers/storage/volumes/ironic/_data` is mounted at `/var/lib/ironic` in the `ironic_http` container, and the `/var/lib/ironic/httpboot` directory (`DocumentRoot`) is served at http://172.19.74.1:8089 (`Listen`), we can copy operating system images in raw or qcow2 format to `/var/lib/containers/storage/volumes/ironic/_data/httpboot/<IMAGE>` and reference them as `http://172.19.74.1:8089/<IMAGE>`:

```
kolla@bms:~$ sudo wget \
    -P /var/lib/containers/storage/volumes/ironic/_data/httpboot/ \
    https://cloud.debian.org/images/cloud/bookworm/latest/debian-12-nocloud-amd64.qcow2
...
debian-12-nocloud-amd64.qcow2 100%[========================================>] 398.39M
kolla@bms:~$ sudo wget \
    -P /var/lib/containers/storage/volumes/ironic/_data/httpboot/ \
    https://cloud.debian.org/images/cloud/bookworm/latest/debian-12-generic-amd64.qcow2
...
debian-12-generic-amd64.qcow2 100%[========================================>] 422.45M
kolla@bms:~$ sudo podman exec --workdir=/var/lib/ironic/httpboot \
    ironic_http sh -c 'sha256sum *.qcow2 >CHECKSUM'
kolla@bms:~$ sudo podman exec ironic_http ls -la /var/lib/ironic/httpboot
total 1202312
drwxr-xr-x 3 ironic ironic      4096 May 28 14:34 .
drwxr-xr-x 8 ironic ironic       162 Apr 14 15:17 ..
-rw-r--r-- 1 ironic ironic      1004 Apr  2 18:28 boot.ipxe
-rw-r--r-- 1 root   root         192 May 28 14:27 CHECKSUM
-rw-r--r-- 1 ironic ironic   4843992 Apr 11 16:18 cp042886.exe
-rw-r--r-- 1 root   root   442970624 May 19 23:34 debian-12-generic-amd64.qcow2
-rw-r--r-- 1 root   root   417746432 May 19 23:50 debian-12-nocloud-amd64.qcow2
-rw-r--r-- 1 ironic ironic   8388608 May 27 17:37 esp.img
-rw-r--r-- 1 root   root         533 May 27 17:40 inspector.ipxe
-rw-r--r-- 1 root   root   348999425 May 27 17:40 ironic-agent.initramfs
-rw-r--r-- 1 root   root     8193984 May 27 17:40 ironic-agent.kernel
drwxr-xr-x 2 ironic ironic         6 May 28 14:34 pxelinux.cfg
kolla@bms:~$ curl -I http://172.19.74.1:8089/debian-12-nocloud-amd64.qcow2
HTTP/1.1 200 OK
Date: Wed, 28 May 2025 10:46:31 GMT
Server: Apache/2.4.62 (Debian)
Last-Modified: Mon, 19 May 2025 21:50:09 GMT
ETag: "18e64e00-635841d9c4640"
Accept-Ranges: bytes
Content-Length: 417746432
```

Note: the CHECKSUM file is needed so that Ironic can verify that the image was not altered while being transferred from the source server to the bare metal node.

We are now ready for a first test deployment.

3.5.1 Test

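For a first test, we can use the Debian Cloud image called "nocloud", `debian-12-nocloud-amd64.qcow2`, to check that the process works. Note that this image does not include cloud-init: since the SSH service is not enabled, it only allows passwordless root login directly from the console.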
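In this setup `image_checksum` points at the whole CHECKSUM file rather than at a single digest, so the entry for the image is expected to be found by its file name. A quick local check that such an entry exists and still matches the image can save a failed deployment. The sketch below uses coreutils' `sha256sum`; `verify_image` is a hypothetical name, and file names containing whitespace are not handled.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): check that IMAGE has an entry in
# DIR/CHECKSUM (sha256sum format: "<digest>  <name>") and that it matches.
# File names containing whitespace are not supported.
verify_image() {
  local dir=$1 image=$2 digest name expected="" actual
  while read -r digest name; do
    name=${name#\*}               # binary-mode lines are "<digest> *<name>"
    if [[ $name == "$image" ]]; then
      expected=$digest
      break
    fi
  done <"$dir/CHECKSUM"
  if [[ -z $expected ]]; then
    echo "no CHECKSUM entry for $image" >&2
    return 1
  fi
  actual=$(sha256sum "$dir/$image") || return 1
  actual=${actual%% *}            # keep only the digest column
  if [[ $actual != "$expected" ]]; then
    echo "digest mismatch for $image" >&2
    return 1
  fi
  echo "$image: OK"
}

# Usage (not executed here; reading the volume path usually requires root):
#   verify_image /var/lib/containers/storage/volumes/ironic/_data/httpboot \
#     debian-12-nocloud-amd64.qcow2
```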

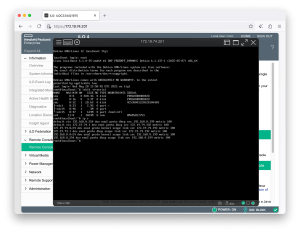

```
kolla@bms:~$ openstack baremetal node set server01 \
    --instance-info image_source=http://172.19.74.1:8089/debian-12-nocloud-amd64.qcow2 \
    --instance-info image_checksum=http://172.19.74.1:8089/CHECKSUM
kolla@bms:~$ openstack baremetal node deploy server01
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power on    | wait call-back     | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power on    | deploying          | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power on    | active             | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
```

When the process completes, i.e. when the node's Provisioning State becomes `active`, we can check the result directly on the server console or through its BMC:

To tear down this test instance:

```
kolla@bms:~$ openstack baremetal node undeploy server01
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power on    | deleting           | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
kolla@bms:~$ openstack baremetal node list
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name     | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
| 5113ab44-faf2-4f76-bb41-ea8b14b7aa92 | server01 | None          | power off   | available          | False       |
| 5294ec18-0dae-449e-913e-24a0638af8e5 | server02 | None          | power off   | manageable         | False       |
| f0d3f50a-d543-4e3d-9b9a-ba719373ab58 | server03 | None          | power off   | manageable         | False       |
+--------------------------------------+----------+---------------+-------------+--------------------+-------------+
```

The node is powered off, and its Provisioning State moves from `active` to `deleting` and then back to `available`.

3.5.2 cloud-init

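Static images such as the previous debian-12-nocloud offer no configuration flexibility. Unless they are built specifically for your needs (partitioning, network, users, software, etc.), their usefulness is often limited.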

We can reach our goal by using an image that includes cloud-init:

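"Cloud images are operating system templates and every instance starts out as an identical clone of every other instance. It is the user data that gives every cloud instance its personality and cloud-init is the tool that applies user data to your instances automatically."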

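To pass custom instructions to the bare metal node, we use a small portion of the node's own disk, called the Config Drive. During deployment, the required information is written as structured files into a filesystem on this Config Drive.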
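The Config Drive content is prepared as a plain directory tree (`openstack/latest/...`, as in the commands and the rescue session later in this article). Since a deployment takes over ten minutes, a pre-flight check can catch obvious mistakes early. The sketch below is hypothetical (`check_config_drive` is not an Ironic tool): it treats `meta_data.json` as required and accepts only `#cloud-config` or `#!` user data, the two most common cloud-init formats.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): sanity-check a Config Drive source
# directory before `openstack baremetal node deploy NODE --config-drive=DIR`.
# Expected layout (as used in this article):
#   DIR/openstack/latest/meta_data.json     (treated as required here)
#   DIR/openstack/latest/user_data          (optional)
#   DIR/openstack/latest/network_data.json  (optional)
check_config_drive() {
  local d=$1/openstack/latest first="" rc=0
  if [[ ! -f $d/meta_data.json ]]; then
    echo "missing $d/meta_data.json" >&2
    rc=1
  fi
  if [[ -f $d/user_data ]]; then
    IFS= read -r first <"$d/user_data" || true
    # Only the two most common cloud-init formats are accepted by this sketch.
    if [[ $first != '#cloud-config'* && $first != '#!'* ]]; then
      echo "user_data is neither #cloud-config nor a #! script" >&2
      rc=1
    fi
  fi
  [[ -f $d/network_data.json ]] || echo "note: no network_data.json" >&2
  return "$rc"
}

# Usage (not executed here):
#   check_config_drive ~/ConfigDrive/server01 && \
#     openstack baremetal node deploy server01 \
#       --config-drive="$HOME/ConfigDrive/server01" --wait
```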

Suppose we want to install node `server01` with the following requirements:

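- an `ironic` user, with a preinstalled public SSH key and sudo rights to run commands as root;
- software to install automatically: git and podman;
- the LAN network interface configured with the static IP address 192.168.0.11/24, a default gateway and public DNS servers.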

We can proceed as follows:

```
kolla@bms:~$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/kolla/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
...
kolla@bms:~$ RSA_PUB=$(cat ~/.ssh/id_rsa.pub)
kolla@bms:~$ NODE=server01
kolla@bms:~$ mkdir -p ~/ConfigDrive/$NODE/openstack/latest
kolla@bms:~$ cat >~/ConfigDrive/$NODE/openstack/latest/meta_data.json <<EOF
{
  "uuid": "$(openstack baremetal node show $NODE -f value -c uuid)",
  "hostname": "$NODE"
}
EOF
kolla@bms:~$ cat >~/ConfigDrive/$NODE/openstack/latest/user_data <<EOF
#cloud-config
package_update: true
packages:
  - git
  - podman
users:
  - name: ironic
    shell: /bin/bash
    sudo: ["ALL=(ALL) NOPASSWD:ALL"]
    ssh_authorized_keys:
      - '$RSA_PUB'
EOF
kolla@bms:~$ cat >~/ConfigDrive/$NODE/openstack/latest/network_data.json <<EOF
{
  "links": [
    {
      "id": "oob0",
      "type": "phy",
      "ethernet_mac_address": "9c:b6:54:b2:b0:ca"
    },
    {
      "id": "lan0",
      "type": "phy",
      "ethernet_mac_address": "9c:b6:54:b2:b0:cb"
    }
  ],
  "networks": [
    {
      "id": "oob",
      "type": "ipv4_dhcp",
      "link": "oob0",
      "network_id": "oob"
    },
    {
      "id": "lan",
      "type": "ipv4",
      "link": "lan0",
      "ip_address": "192.168.0.11/24",
      "gateway": "192.168.0.254",
      "network_id": "lan"
    }
  ],
  "services": [
    {
      "type": "dns",
      "address": "8.8.8.8"
    },
    {
      "type": "dns",
      "address": "8.8.4.4"
    }
  ]
}
EOF
```

Note: for more information on the structure of the `network_data.json` file, see its schema: https://opendev.org/openstack/nova/src/branch/master/doc/api_schemas/network_data.json

To run a new deployment that takes advantage of cloud-init, we change the `image_source` reference to `debian-12-generic-amd64.qcow2` and point to the directory containing the files needed to build the Config Drive:

```
kolla@bms:~$ openstack baremetal node set server01 \
    --instance-info image_source=http://172.19.74.1:8089/debian-12-generic-amd64.qcow2 \
    --instance-info image_checksum=http://172.19.74.1:8089/CHECKSUM
kolla@bms:~$ time openstack baremetal node deploy server01 \
    --config-drive=$HOME/ConfigDrive/server01 --wait
Waiting for provision state active on node(s) server01

real    12m3.542s
user    0m0.863s
sys     0m0.085s
```

Note: if the previous deployment was not removed with `openstack baremetal node undeploy <UUID|NAME>`, you can still reset `image_source` with `openstack baremetal node set <UUID|NAME> --instance-info image_source=…` and then request a rebuild with `openstack baremetal node rebuild <UUID|NAME> --config-drive=<PATH>`.

3.6 Rescuing Unreachable Nodes (rescue)

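Sometimes things go wrong... IPA (Ironic Python Agent) loads, the operating system seems to have been written to disk correctly, and the Config Drive appears to be in place too. Yet the node simply won't boot, or it boots but the NICs are not configured, the admin user's credentials seem to be wrong, an error message flashes by on the server's monitor... in short, all sorts of problems can show up.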

To use IPA as a rescue system, the node must be in the **active** state; rescue is then invoked with the following command:

```
kolla@bms:~$ openstack baremetal node show server01 -c provision_state
+-----------------+--------+
| Field           | Value  |
+-----------------+--------+
| provision_state | active |
+-----------------+--------+
kolla@bms:~$ time openstack baremetal node rescue server01 \
    --rescue-password=<PASSWORD> --wait
Waiting for provision state rescue on node(s) server01

real    5m42.284s
user    0m0.745s
sys     0m0.045s
kolla@bms:~$ openstack baremetal node show server01 -c provision_state
+-----------------+--------+
| Field           | Value  |
+-----------------+--------+
| provision_state | rescue |
+-----------------+--------+
```

The assigned IP address can be found in the logs of Ironic's DHCP server (a container running dnsmasq), by looking up the MAC address of the NIC used for deployment:

```
kolla@bms:~$ openstack baremetal port list -f value -c address \
    --node $(openstack baremetal node show server01 -f value -c uuid)
9c:b6:54:b2:b0:ca
kolla@bms:~$ sudo grep -i 9c:b6:54:b2:b0:ca /var/log/kolla/ironic/dnsmasq.log | tail -1
May 31 11:13:58 dnsmasq-dhcp[2]: DHCPACK(enp2s0) 172.19.74.192 9c:b6:54:b2:b0:ca
```

Finally, log in as the `rescue` user with the password set above to analyze the disk contents, the system and cloud-init logs, etc.:

```
kolla:~$ ssh rescue@172.19.74.192
...
[rescue ~]$ sudo -i
[root ~]# lsblk -o+fstype,label
NAME    MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS FSTYPE  LABEL
sda       8:0    0   3.3T  0 disk
sdb       8:16   1  28.6G  0 disk
|-sdb1    8:17   1  28.5G  0 part             ext4
|-sdb2    8:18   1  64.4M  0 part             iso9660 config-2
|-sdb14   8:30   1     3M  0 part
`-sdb15   8:31   1   124M  0 part             vfat
sdc       8:32   0 838.3G  0 disk
sr0      11:0    1  1024M  0 rom
[root ~]# mount /dev/sdb1 /mnt
[root ~]# mount --bind /dev /mnt/dev
[root ~]# mount --bind /sys /mnt/sys
[root ~]# mount --bind /proc /mnt/proc
[root ~]# chroot /mnt
root:/# journalctl --list-boots
IDX BOOT ID                          FIRST ENTRY                 LAST ENTRY
  0 34c9893dd3ab4a928207f7da41ec1226 Tue 2025-06-03 10:31:39 UTC Tue 2025-06-03 12:17:50 UTC
root:/# journalctl -b 34c9893dd3ab4a928207f7da41ec1226
...
root:/# less /var/log/cloud-init.log
...
```

To check how the Config Drive was written:

```
[root ~]# mount -r LABEL=config-2 /media
[root ~]# find /media
/media
/media/openstack
/media/openstack/latest
/media/openstack/latest/meta_data.json
/media/openstack/latest/network_data.json
/media/openstack/latest/user_data
[root ~]# cat /media/openstack/latest/meta_data.json
{
  "uuid": "5113ab44-faf2-4f76-bb41-ea8b14b7aa92",
  "hostname": "server01"
}
[root ~]# cat /media/openstack/latest/user_data
#cloud-config
package_update: true
packages:
  - git
  - podman
users:
  - name: ironic
    shell: /bin/bash
    sudo: ["ALL=(ALL) NOPASSWD:ALL"]
    ssh_authorized_keys:
      - 'ssh-rsa ...'
[root ~]# umount /media
```

Note: to leave the rescue state, do not reboot the system from inside (e.g. `reboot`, `shutdown -r now`, etc.): this would only boot the operating system on disk without changing the node's state in the Ironic database. Instead, run `openstack baremetal node unrescue <UUID|NAME>` from the management server.

04 Automation and Infrastructure as Code

Infrastructure as Code (IaC) is an approach to IT infrastructure management in which servers, networks, databases and other components are defined and configured through code instead of being created and managed by hand. The infrastructure effectively becomes a set of declarative, versionable and automatable files, which makes it possible to build complex environments in a repeatable, reliable and scalable way.

For demonstration purposes, we will use two of the most widely used tools: Terraform and Ansible.

4.1 Terraform/OpenTofu

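In this chapter, the main goal is to reproduce with Terraform/OpenTofu, in the simplest and most direct way, the steps we have performed so far on the command line to install the Debian 12 cloud image.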

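Developed by HashiCorp, Terraform is one of the most widely used tools for orchestrating cloud resources (AWS, Azure, GCP, OpenStack) and "traditional" environments (VMware, Proxmox, etc.). Its language lets you describe the resources an infrastructure needs (servers, networks, DNS, firewalls, storage and more) in a clear and repeatable way.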

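OpenTofu was born as a fork of Terraform after HashiCorp (recently acquired by IBM) changed Terraform's license, and it has remained compatible with it. Governed by the open-source community, its mission is to keep IaC open, accessible and independent of commercial interests.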

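To install Terraform, follow the instructions at:

https://developer.hashicorp.com/terraform/install

To install OpenTofu, run `apt install -y tofu` (the `tofu` package comes from the OpenTofu APT repository, which must be configured first as described in the OpenTofu installation guide).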

Let's start by searching for an OpenStack Ironic provider in the respective registries:

- Terraform: https://registry.terraform.io/browse/providers
- OpenTofu: https://search.opentofu.org/

There we find a generic provider, published by the Appkins Org and derived from the OpenShift project's Terraform provider for Ironic: appkins-org/ironic.

Next, in a directory created specifically to hold the Terraform/OpenTofu files, create a file named `main.tf` with the following content:

```
terraform {
  required_providers {
    ironic = {
      source  = "appkins-org/ironic"
      version = "0.6.1"
    }
  }
}

provider "ironic" {
  url           = "http://172.19.74.1:6385/v1"
  auth_strategy = "noauth"
  microversion  = "1.96"
  timeout       = 900
}

resource "ironic_node_v1" "server01" {
  name      = "server01"
  inspect   = true  # run inspection
  clean     = false # do not clean the node
  available = true  # make the node 'available'

  ports = [
    {
      "address"     = "9c:b6:54:b2:b0:ca"
      "pxe_enabled" = "true"
    },
  ]

  driver = "redfish"
  driver_info = {
    "redfish_address"   = "https://172.19.74.201"
    "redfish_verify_ca" = "False"
    "redfish_username"  = "ironic"
    "redfish_password"  = "baremetal"
  }
}

resource "ironic_deployment" "server01" {
  node_uuid = ironic_node_v1.server01.id

  instance_info = {
    image_source   = "http://172.19.74.1:8089/debian-12-generic-amd64.qcow2"
    image_checksum = "http://172.19.74.1:8089/CHECKSUM"
  }

  metadata = {
    uuid     = ironic_node_v1.server01.id
    hostname = ironic_node_v1.server01.name
  }

  user_data = <<-EOT
    #cloud-config
    package_update: true
    packages:
      - git
      - podman
    users:
      - name: ironic
        shell: /bin/bash
        sudo: ["ALL=(ALL) NOPASSWD:ALL"]
        ssh_authorized_keys:
          - 'ssh-rsa ...'
  EOT
}
```

Besides declaring the `appkins-org/ironic` provider and where to find it, this file defines two resources. The first, of type `ironic_node_v1`, combines the enrollment and inspection phases. The second, of type `ironic_deployment`, handles the deployment phase and the creation of the related Config Drive.

To download the provider and its dependencies, just run the Terraform/OpenTofu `init` command:

```
kolla@bms:~/TF$ terraform init
- or -
kolla@bms:~/TF$ tofu init

Initializing the backend...
Initializing provider plugins...
- Finding appkins-org/ironic versions matching "0.6.1"...
- Installing appkins-org/ironic v0.6.1...
- Installed appkins-org/ironic v0.6.1
...
Terraform/OpenTofu has been successfully initialized!
```

Then, to perform the actual deployment, run the `apply` command:

```
kolla@bms:~/TF$ terraform apply
- or -
kolla@bms:~/TF$ tofu apply
...
Plan: 2 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes
...
ironic_deployment.server01: Creation complete after 10m32s [id=12016c80-be15-48ed-9b55-af00911c66b5]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.
```

4.2 Ansible

In this chapter, the goal is a more complex deployment that covers not only the operating system configuration but also the installation of a container orchestration platform, such as an all-in-one (single-node) Kubernetes setup.

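Ansible is an open-source automation platform known for its simplicity and power. Unlike other automation tools, Ansible is agentless: beyond SSH access (and Python, for most modules), it does not require any additional software on the nodes it manages.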

That said, other automation systems, such as Chef, Juju, Puppet or SaltStack, to name a few, may be just as effective for your environment, or an even better fit.

4.2.1 Ansible Playbook

The playbook is structured as follows:

```
kolla@bms:~$ tree Ansible
Ansible
├── config.yml
├── deploy.yml
├── group_vars
│   └── all.yml
└── roles
    ├── ironic
    │   └── tasks
    │       └── main.yml
    └── k8s-aio
        ├── defaults
        │   └── main.yml
        └── tasks
            ├── configure.yml
            ├── install.yml
            ├── main.yml
            └── prepare.yml

8 directories, 9 files
```

In the `config.yml` file we create a data structure describing the list of nodes to provision (`nodes: []`), following as closely as possible the shape Ironic expects (`bmc`, `root_device`, `instance_info`, `network_data`, etc.) and adding some extra information such as `ansible_host` and `ansible_roles`:

```
# config.yml
---
# Passed with -e, so it overrides cloud_config from group_vars/all.yml
cloud_config: |
  #cloud-config
  users:
    - name: {{ default_user }}
      lock_passwd: false
      sudo: ['ALL=(ALL) NOPASSWD:ALL']
      ssh_authorized_keys:
        - '{{ public_key }}'
  mounts:
    - ['swap', null]
nodes:
  - name: server01
    ansible_host: "192.168.0.11"
    ansible_roles:
      - k8s-aio
    bmc:
      driver: redfish
      driver_info:
        redfish_address: "https://172.19.74.201"
        redfish_verify_ca: false
        redfish_username: "ironic"
        redfish_password: "baremetal"
    pxe:
      mac: "9c:b6:54:b2:b0:ca"
    root_device:
      serial: "4C530001220528100484"
    instance_info:
      image_source: "http://172.19.74.1:8089/debian-12-generic-amd64.qcow2"
      image_checksum: "http://172.19.74.1:8089/CHECKSUM"
    network_data:
      links:
        - id: "oob0"
          type: "phy"
          ethernet_mac_address: "9c:b6:54:b2:b0:ca"
        - id: "lan0"
          type: "phy"
          ethernet_mac_address: "9c:b6:54:b2:b0:cb"
      networks:
        - id: "oob"
          type: "ipv4_dhcp"
          link: "oob0"
          network_id: "oob"
        - id: "lan"
          type: "ipv4"
          link: "lan0"
          ip_address: "192.168.0.11"
          netmask: "255.255.255.0"
          gateway: "192.168.0.254"
          network_id: "lan"
      services:
        - type: "dns"
          address: "8.8.8.8"
        - type: "dns"
          address: "8.8.4.4"
```

Note: although the `ansible_host` variable is redundant (it could be derived by processing the `network_data` structure), it is better to keep it explicit, to improve the playbook's readability and avoid complex JSON queries. If you prefer not to duplicate the information, you still need to specify which interface to use for the connection (e.g. `ansible_link: "lan0"`) for the query to work.

As a workaround to provision each node dynamically, the list of Ansible roles to apply to each node goes in the `ansible_roles` variable, which the `deploy.yml` playbook consumes:

```
# deploy.yml
---
- hosts: localhost
  connection: local
  gather_facts: true
  tasks:
    - name: Provision baremetal nodes
      ansible.builtin.include_role:
        name: ironic
      loop: "{{ nodes }}"
      loop_control:
        loop_var: node

- hosts: ironic
  gather_facts: false
  tasks:
    - name: Wait for node to become reachable
      ansible.builtin.wait_for_connection:

    - name: Gather facts for first time
      ansible.builtin.setup:

    - name: Provision node {{ ansible_hostname }}
      ansible.builtin.include_role:
        name: "{{ role }}"
      loop: "{{ ansible_roles }}"
      loop_control:
        loop_var: role
```

Note the two plays, each acting on a different set of hosts (under `hosts:`):

- `localhost`: from here we drive OpenStack Ironic through its API, for each node defined in the `config.yml` configuration file;
- `ironic`: a dynamic group, populated as the `ironic` role in the first play completes the operating system deployment of each node.

4.2.2 The "ironic" Role

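The `ironic` role (in the `roles/ironic` subdirectory) covers the enrollment phase (with the `openstack.cloud.baremetal_node` module) and the actual deployment phase (with the `openstack.cloud.baremetal_node_action` module). It also adds the node itself to the `ironic` group of the inventory (with the `ansible.builtin.add_host` module):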

```
# roles/ironic/tasks/main.yml
---
- name: "Enroll {{ node.name }}"
  register: baremetal_create
  openstack.cloud.baremetal_node:
    cloud: "ironic-standalone"
    driver: "{{ node.bmc.driver }}"
    driver_info: "{{ node.bmc.driver_info }}"
    name: "{{ node.name }}"
    uuid: "{{ node.uuid|default(omit) }}"
    nics:
      - mac: "{{ node.pxe.mac }}"
    properties:
      capabilities: "boot_option:local"
      root_device: "{{ node.root_device }}"

- name: "Deploy {{ node.name }}"
  when: baremetal_create is changed
  openstack.cloud.baremetal_node_action:
    cloud: "ironic-standalone"
    id: "{{ baremetal_create.node.id }}"
    timeout: 1800
    instance_info: "{{ node.instance_info }}"
    config_drive:
      meta_data:
        uuid: "{{ baremetal_create.node.id }}"
        hostname: "{{ node.name }}"
      user_data: "{{ cloud_config }}"
      network_data: "{{ node.network_data }}"

- name: "Add {{ node.name }} to inventory"
  ansible.builtin.add_host:
    name: "{{ node.name }}"
    groups: ironic
    ansible_host: "{{ node.ansible_host }}"
    ansible_user: "{{ default_user }}"
    ansible_ssh_extra_args: "-o StrictHostKeyChecking=no"
    ansible_roles: "{{ node.ansible_roles }}"
```

Each module in the openstack.cloud collection (https://docs.ansible.com/ansible/latest/collections/openstack/cloud/index.html) uses the connection parameters referenced by the `cloud` variable, which are defined in `~/.config/openstack/clouds.yaml` (as described in Part One, section 2.6, OpenStack Ironic "standalone" configuration). Alternatively, you can omit the `cloud` parameter and specify the connection parameters explicitly:

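```
- name: ...
  openstack.cloud.baremetal_...:
    auth:
      endpoint: "http://172.19.74.1:6385"
    auth_type: none
    ...
```

Note: the extra parameter `-o StrictHostKeyChecking=no` is needed because the SSH host keys are generated randomly at each deployment and may clash: if you delete and then redeploy the same server with the same IP address, its SSH host key will be different.

Creating the `ironic` system user and preventing swap from being activated (a Kubernetes requirement) are handled through `user_data`, using the `cloud_config` variable defined in the `config.yml` file.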

4.2.3 The "k8s-aio" Role

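The `k8s-aio` role (in the `roles/k8s-aio` subdirectory) is structured to reproduce the Kubernetes installation phases described in the official documentation, *Bootstrapping clusters with kubeadm* (https://kubernetes.io/docs/setup/production/audited/kubeadm/), with the addition of the Flannel CNI and the NGINX Ingress controller.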

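For each physical node that requires this role, the tasks in `prepare.yml` and `install.yml` run one after the other with privileged (root) rights, while `configure.yml` runs as the `ironic` user: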

```yaml
# roles/k8s-aio/tasks/main.yml
---
- name: Prepare server
  ansible.builtin.include_tasks:
    file: prepare.yml
    apply:
      become: true

- name: Install Kubernetes
  ansible.builtin.include_tasks:
    file: install.yml
    apply:
      become: true

- name: Configure Kubernetes
  ansible.builtin.include_tasks:
    file: configure.yml
```

The prepare.yml file covers the system requirements: the kernel modules to load, the networking parameters and the required packages.

```yaml
# roles/k8s-aio/tasks/prepare.yml
---
- name: Enable Kernel modules
  community.general.modprobe:
    name: "{{ item }}"
    state: present
    persistent: present
  loop:
    - overlay
    - br_netfilter

- name: Setup networking stack
  ansible.posix.sysctl:
    name: "{{ item.name }}"
    value: "{{ item.value }}"
    sysctl_file: /etc/sysctl.d/kubernetes.conf
  loop:
    - name: net.bridge.bridge-nf-call-ip6tables
      value: 1
    - name: net.bridge.bridge-nf-call-iptables
      value: 1
    - name: net.ipv4.ip_forward
      value: 1

- name: Install dependencies
  ansible.builtin.apt:
    pkg:
      - apt-transport-https
      - ca-certificates
      - containerd
      - curl
      - gpg
      - reserialize
    update_cache: yes
```

The installation phase follows the procedure described in the Kubernetes documentation. At the end of this phase, a single-node Kubernetes cluster answers requests through its standard API.

```yaml
# roles/k8s-aio/tasks/install.yml
---
- name: Download the public key for Kubernetes repository
  ansible.builtin.shell: |
    curl -fsSL {{ k8s_pkgs_url }}/Release.key | \
    gpg --dearmor -o /etc/apt/keyrings/kubernetes.gpg
  args:
    creates: /etc/apt/keyrings/kubernetes.gpg

- name: Add Kubernetes APT repository
  ansible.builtin.apt_repository:
    repo: "deb [signed-by=/etc/apt/keyrings/kubernetes.gpg] {{ k8s_pkgs_url }} /"
    filename: kubernetes
    state: present

- name: Install Kubernetes packages
  ansible.builtin.apt:
    pkg:
      - jq
      - kubeadm
      - kubectl
      - kubelet

- name: Check SystemdCgroup in containerd
  register: systemd_cgroup
  # Literal block (|) so the line continuation reaches the shell intact
  ansible.builtin.shell: |
    crictl info | \
    jq -r '.config.containerd.runtimes.runc.options.SystemdCgroup'

- name: Enable SystemdCgroup in containerd
  when: systemd_cgroup.stdout == "false"
  ansible.builtin.shell: |
    containerd config default | \
    reserialize toml2json | \
    jq '.plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options.SystemdCgroup=true' | \
    reserialize json2toml >/etc/containerd/config.toml

- name: Restart containerd service
  when: systemd_cgroup.stdout == "false"
  ansible.builtin.systemd_service:
    name: containerd.service
    state: restarted

- name: Pull the required Kubernetes images
  ansible.builtin.command:
    cmd: kubeadm config images pull
    creates: /etc/kubernetes/admin.conf

- name: Initialize Kubernetes
  ansible.builtin.command:
    cmd: kubeadm init --pod-network-cidr={{ k8s_network }} --control-plane-endpoint {{ ansible_host }}:6443
    creates: /etc/kubernetes/admin.conf
```

In the configuration phase, the non-administrative user completes the Kubernetes setup by installing Flannel and Ingress-Nginx.

```yaml
# roles/k8s-aio/tasks/configure.yml
---
- name: Read /etc/kubernetes/admin.conf
  become: true
  register: kube_conf
  ansible.builtin.slurp:
    src: /etc/kubernetes/admin.conf

- name: Create ~/.kube
  ansible.builtin.file:
    path: ~/.kube
    state: directory
    mode: 0700

- name: Create ~/.kube/config
  ansible.builtin.copy:
    content: "{{ kube_conf['content']|b64decode }}"
    dest: "~/.kube/config"
    mode: 0600

- name: Install Flannel
  # https://github.com/flannel-io/flannel?tab=readme-ov-file#deploying-flannel-with-kubectl
  ansible.builtin.command:
    cmd: kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

- name: Remove NoSchedule taint for control-plane
  failed_when: false
  ansible.builtin.command:
    cmd: kubectl taint nodes {{ ansible_hostname }} node-role.kubernetes.io/control-plane:NoSchedule-

- name: Install Ingress-Nginx Controller
  ansible.builtin.command:
    cmd: kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml
```

The file roles/k8s-aio/defaults/main.yml holds some default values for the k8s-aio role. The pod network 10.244.0.0/16 is the one that the default Flannel manifest expects.

```yaml
# roles/k8s-aio/defaults/main.yml
---
k8s_version: "1.33"
k8s_network: "10.244.0.0/16"
k8s_pkgs_url: "https://pkgs.k8s.io/core:/stable:/v{{ k8s_version }}/deb"
```

The playbook is completed by the global variables file group_vars/all.yml. It contains:

- the default public SSH key;
- the default name of the user to create;
- the content of user_data;
- the initialization of the node list.

```yaml
# group_vars/all.yml
---
public_key: "{{ lookup('file', '~/.ssh/id_rsa.pub') }}"
default_user: ironic
cloud_config: |
  #cloud-config
  users:
    - name: {{ default_user }}
      shell: /bin/bash
      sudo: ['ALL=(ALL) NOPASSWD:ALL']
      ssh_authorized_keys:
        - '{{ public_key }}'
nodes: []
```

4.2.4 Deployment

Launch the playbook with the ansible-playbook -e @config.yml deploy.yaml command.
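After the playbook finishes, you can run a quick check from the bms server. It tests whether each node accepts connections on port 6443, which the Initialize Kubernetes task passes to kubeadm init through --control-plane-endpoint. The Python snippet below is a minimal sketch and is not part of the original playbook. The function name k8s_api_reachable and the script name check_api.py are hypothetical. A successful TCP connection only shows that something is listening on the port. It does not show that the cluster is healthy. To check the cluster itself, run kubectl get nodes on the node.

```python
import socket
import sys


def k8s_api_reachable(host: str, port: int = 6443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Success only means something is listening on the port (normally the
    kube-apiserver started by kubeadm init); it says nothing about
    authentication or cluster health.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timeout, unresolvable host, ...
        return False


if __name__ == "__main__":
    # Hypothetical usage: python3 check_api.py <node-ip> [<node-ip> ...]
    for host in sys.argv[1:]:
        state = "reachable" if k8s_api_reachable(host) else "unreachable"
        print(f"{host}:6443 {state}")
```

Pass the addresses used as ansible_host for the deployed nodes. An "unreachable" result usually means that kubeadm init did not complete on that node, or that a firewall blocks the port.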

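On a deployed node, you can confirm that the settings from prepare.yml are live by reading them back from /proc. The Python sketch below illustrates one way to do this and is not part of the k8s-aio role. The helper names sysctl_mismatches and missing_modules are hypothetical. The sketch assumes a Linux host where a sysctl key maps to a path under /proc/sys by replacing dots with slashes. The net.bridge.* keys exist only after br_netfilter is loaded, which is why prepare.yml loads the module before it sets them. A module built into the kernel (on some kernels overlay is built in) never appears in /proc/modules, so this sketch reports it as missing.

```python
from __future__ import annotations

from pathlib import Path

# Values set by the "Setup networking stack" task in prepare.yml.
EXPECTED_SYSCTL = {
    "net.bridge.bridge-nf-call-ip6tables": "1",
    "net.bridge.bridge-nf-call-iptables": "1",
    "net.ipv4.ip_forward": "1",
}

# Modules loaded by the "Enable Kernel modules" task in prepare.yml.
EXPECTED_MODULES = ("overlay", "br_netfilter")


def sysctl_mismatches(expected: dict[str, str],
                      proc_sys: Path = Path("/proc/sys")) -> dict[str, str | None]:
    """Return {key: actual} for every key whose live value differs.

    actual is None when the key does not exist at all, e.g. net.bridge.*
    while br_netfilter is not loaded.
    """
    mismatches: dict[str, str | None] = {}
    for key, want in expected.items():
        # Assumption: no key segment contains a dot, so "a.b.c" -> a/b/c.
        path = proc_sys / key.replace(".", "/")
        try:
            got = path.read_text().strip()
        except FileNotFoundError:
            got = None
        if got != want:
            mismatches[key] = got
    return mismatches


def missing_modules(expected, proc_modules: Path = Path("/proc/modules")) -> list[str]:
    """Return the modules from `expected` that are not listed in /proc/modules.

    Built-in modules never appear in /proc/modules, so they are reported
    here even though the kernel supports them.
    """
    loaded = {
        line.split()[0]
        for line in proc_modules.read_text().splitlines()
        if line.strip()
    }
    return [name for name in expected if name not in loaded]
```

On a correctly prepared node, sysctl_mismatches(EXPECTED_SYSCTL) should return an empty dict. missing_modules(EXPECTED_MODULES) should return an empty list, unless overlay is built into the kernel.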
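A few thoughts

In this setup, OpenStack Ironic runs in "standalone" mode. Even so, cloud-ready OS images that include cloud-init, combined with Infrastructure as Code (IaC) and automation tools, make effective hardware lifecycle management possible, up to and including the application layer.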
