I. Introduction to Masakari

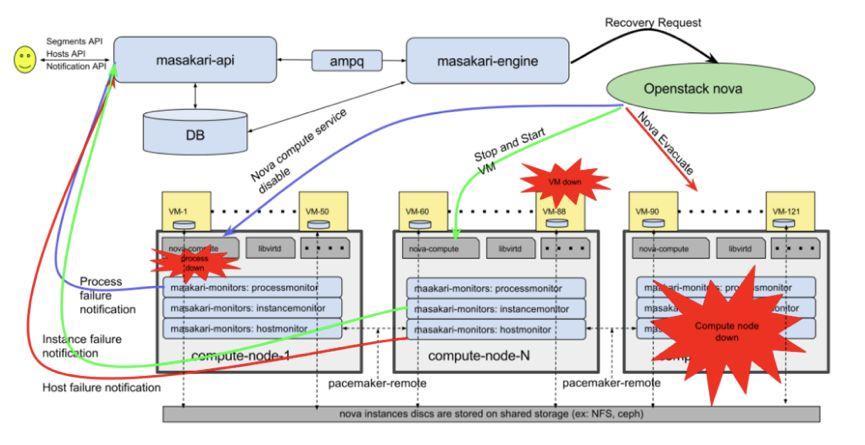

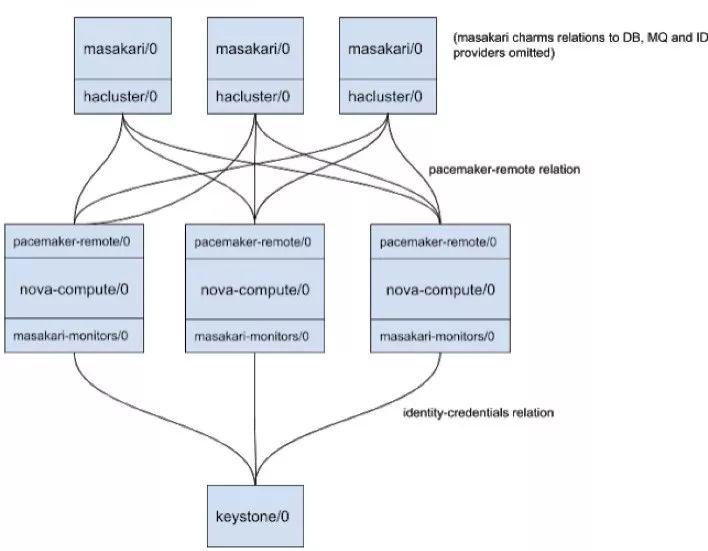

II. Masakari Architecture and Principles

-

masakari-api: 运行在控制节,提供服务api。通过RPC它将发送到的处理API请求交由masakari-engine处理。

-

masakari-engine: 运行在控制节点,通过以异步方式执行恢复工作流来处理收到的masakari-api发送的通知。

-

masakari-instancemonitor : 运行在计算节点,属于masakari-monitor,检测虚拟机进程是否挂掉了。

-

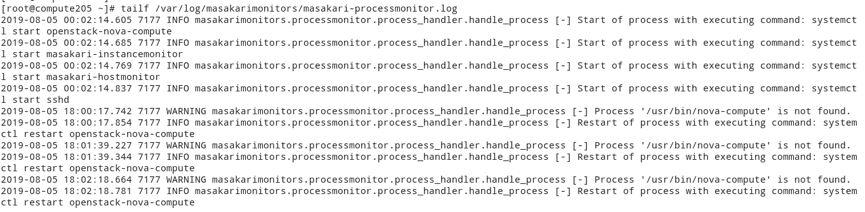

masakari-processmonitor : 运行在计算节点,属于masakari-monitor,检测Nova-compute是否挂了。

-

masakari-hostmonitor : 运行在计算节点,属于masakari-monitor,检测计算节点是否挂了。

-

masakari-introspectiveinstancemonitor:运行在计算节点,属于masakari-monitor,当虚拟机安装了qemu-ga,可用于检测以及启动回复故障进程或服务。

-

pacemaker-remote:运行在计算节点,解决corosync/pacemaker的16个节点的限制。
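The division of labor above (monitors report, masakari-api accepts, masakari-engine recovers) can be sketched in a few lines. The notification types VM, PROCESS and COMPUTE_HOST and the body fields (type, hostname, generated_time, payload) are assumed to follow the Masakari notifications API; the mapping tables, helper names and handler labels are hypothetical and only illustrate the routing.

```python
"""Illustrative sketch of the monitor -> masakari-api -> masakari-engine path.

Assumption: the notification types and body fields follow the Masakari
notifications API; the helpers and labels below are hypothetical.
"""
from datetime import datetime, timezone

# Notification type each monitor is assumed to report.
MONITOR_NOTIFICATION_TYPE = {
    "masakari-instancemonitor": "VM",
    "masakari-introspectiveinstancemonitor": "VM",
    "masakari-processmonitor": "PROCESS",
    "masakari-hostmonitor": "COMPUTE_HOST",
}

# Hypothetical labels for the recovery flow masakari-engine runs per type.
ENGINE_FLOW = {
    "VM": "instance failure recovery",
    "PROCESS": "process failure recovery",
    "COMPUTE_HOST": "host failure recovery",
}


def build_notification(monitor, hostname, payload):
    """Build the JSON body a monitor would POST to masakari-api."""
    return {
        "notification": {
            "type": MONITOR_NOTIFICATION_TYPE[monitor],
            "hostname": hostname,
            "generated_time": datetime.now(timezone.utc).isoformat(),
            "payload": payload,
        }
    }


def engine_flow_for(body):
    """Pick the recovery flow masakari-engine would run for this body."""
    return ENGINE_FLOW[body["notification"]["type"]]


if __name__ == "__main__":
    body = build_notification("masakari-hostmonitor", "compute205",
                              {"event": "STOPPED"})
    print(body["notification"]["type"], "->", engine_flow_for(body))
```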

III. Introduction to Pacemaker-remote

IV. Introduction to STONITH

V. Installing Masakari on the Controller Nodes

1. Installing masakari-api and masakari-engine

1) Create the masakari user (enter masakari as the password when prompted)

```bash
openstack user create --password-prompt masakari
```

2) Add the admin role to the masakari user

```bash
openstack role add --project service --user masakari admin
```

3) Create the service

```bash
openstack service create --name masakari --description "masakari high availability" instance-ha
```

4) Create the endpoints for the masakari service

```bash
openstack endpoint create --region RegionOne masakari public http://controller:15868/v1/%(tenant_id)s
openstack endpoint create --region RegionOne masakari admin http://controller:15868/v1/%(tenant_id)s
openstack endpoint create --region RegionOne masakari internal http://controller:15868/v1/%(tenant_id)s
```
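The `%(tenant_id)s` suffix in these URLs is a Python %-style placeholder; Keystone fills in the project ID when it returns the service catalog. The sketch below reproduces that substitution with plain string formatting so the resulting URL is easy to see; the helper name and the project ID are made-up example values.

```python
# Minimal sketch: expand the endpoint URL template with %-style substitution.
# The project ID passed in below is a made-up example value.
ENDPOINT_TEMPLATE = "http://controller:15868/v1/%(tenant_id)s"


def expand_endpoint(template, project_id):
    """Return the concrete endpoint URL for one project."""
    return template % {"tenant_id": project_id}


if __name__ == "__main__":
    print(expand_endpoint(ENDPOINT_TEMPLATE, "3f2b9c0d"))
    # prints http://controller:15868/v1/3f2b9c0d
```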

5) Download the matching release of Masakari

```bash
git clone -b stable/stein https://github.com/openstack/masakari.git
```

6) Run setup.py from the masakari source directory

```bash
sudo python setup.py install
```

7) Create the user and directories

```bash
useradd -r -d /var/lib/masakari -c "Masakari instance-ha" -m -s /sbin/nologin masakari
mkdir -pv /etc/masakari
mkdir -pv /var/log/masakari
chown masakari:masakari -R /var/log/masakari
chown masakari:masakari -R /etc/masakari
```

8) Generate the configuration file templates

```bash
tox -e genconfig
```

9) Copy the configuration files

Copy masakari.conf, api-paste.ini and policy.json from the masakari/etc/ directory to /etc/masakari.

10) Create the systemd unit files

This guide runs the services as root; in production, running them as the masakari user is recommended.

```ini
# cat /usr/lib/systemd/system/masakari-api.service
[Unit]
Description=Masakari Api

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/bin/masakari-api

[Install]
WantedBy=multi-user.target
```

```ini
# cat /usr/lib/systemd/system/masakari-engine.service
[Unit]
Description=Masakari engine

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/bin/masakari-engine

[Install]
WantedBy=multi-user.target
```
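The two unit files differ only in the binary and description, so they can be rendered from one template. This is a hypothetical helper (its name and parameters are not part of Masakari); it prints the files instead of writing them, and makes switching from root to the masakari user a one-argument change.

```python
# Hypothetical helper: render the systemd unit files above from one template.
UNIT_TEMPLATE = """[Unit]
Description={description}

[Service]
Type=simple
User={user}
Group={group}
ExecStart=/usr/bin/{binary}

[Install]
WantedBy=multi-user.target
"""


def render_unit(binary, description, user="root", group="root"):
    """Return the unit file text for one Masakari service binary."""
    return UNIT_TEMPLATE.format(description=description, user=user,
                                group=group, binary=binary)


if __name__ == "__main__":
    units = {"masakari-api": "Masakari Api", "masakari-engine": "Masakari engine"}
    for binary, desc in units.items():
        print(f"# /usr/lib/systemd/system/{binary}.service")
        print(render_unit(binary, desc))
```

After writing or changing unit files, run `systemctl daemon-reload` so systemd picks them up.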

11) Edit the configuration file

```ini
# cat /etc/masakari/masakari.conf
[DEFAULT]
auth_strategy = keystone
masakari_topic = ha_engine
notification_driver = taskflow_driver
nova_catalog_admin_info = compute:nova:adminURL
os_region_name = RegionOne
os_privileged_user_name = masakari
os_privileged_user_password = tyun123
os_privileged_user_tenant = services
os_privileged_user_auth_url = https://10.0.5.210:35357
os_user_domain_name = default
os_project_domain_name = default
periodic_enable = true
use_ssl = false
masakari_api_listen = 10.0.5.201
masakari_api_listen_port = 15868
masakari_api_workers = 3
log_dir = /var/log/masakari
transport_url=rabbit://guest:guest@controller201:5672,guest:guest@controller202:5672,guest:guest@controller203:5672
rpc_backend = rabbit
control_exchange = openstack
api_paste_config = /etc/masakari/api-paste.ini

[database]
connection = mysql+pymysql://masakari:tyun123@10.0.5.210/masakari?charset=utf8

[host_failure]
evacuate_all_instances = True
ignore_instances_in_error_state = false
add_reserved_host_to_aggregate = false

[instance_failure]
process_all_instances = true

[keystone_authtoken]
memcache_security_strategy = ENCRYPT
memcache_secret_key = I2Ws13eKT0cQIJJQzX2AtI2aQW6x4vSQdmsqCuBf
memcached_servers = controller201:11211,controller202:11211,controller203:11211
www_authenticate_uri = https://10.0.5.210:5000
auth_url=https://10.0.5.210:35357
auth_uri=https://10.0.5.210:5000
auth_type = password
project_domain_id = default
user_domain_id = default
project_name = service
username = masakari
password = tyun123
user_domain_name=Default
project_domain_name=Default
auth_version = 3
service_token_roles = service

[oslo_messaging_notifications]
transport_url=rabbit://guest:guest@controller201:5672,guest:guest@controller202:5672,guest:guest@controller203:5672
```
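Before starting the services, it can help to sanity-check a few options. The sketch below uses Python's configparser purely as a stand-in for oslo.config: both read this INI syntax, but configparser copies [DEFAULT] keys into every section, which oslo.config does not do. The excerpt is taken from the file above; point `cfg.read()` at /etc/masakari/masakari.conf to check the real file.

```python
# Minimal sketch: read a few masakari.conf options with configparser
# (a stand-in for oslo.config, which Masakari actually uses).
import configparser

SAMPLE = """
[DEFAULT]
masakari_api_listen = 10.0.5.201
masakari_api_listen_port = 15868

[host_failure]
evacuate_all_instances = True
ignore_instances_in_error_state = false

[instance_failure]
process_all_instances = true
"""


def load(text):
    # interpolation=None so '%' characters in values are taken literally
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.read_string(text)
    return cfg


if __name__ == "__main__":
    cfg = load(SAMPLE)
    print("API listens on %s:%d" % (
        cfg.get("DEFAULT", "masakari_api_listen"),
        cfg.getint("DEFAULT", "masakari_api_listen_port")))
    print("evacuate_all_instances =",
          cfg.getboolean("host_failure", "evacuate_all_instances"))
```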

12) Start Masakari

```bash
chown masakari:masakari -R /var/log/masakari
chown masakari:masakari -R /etc/masakari
systemctl start masakari-api masakari-engine
systemctl enable masakari-api masakari-engine
```

2. Installing python-masakariclient

1) Download python-masakariclient

```bash
git clone https://github.com/openstack/python-masakariclient.git
```

2) Install the dependencies

```bash
cd python-masakariclient/
pip install -r requirements.txt
```

3) Install python-masakariclient

```bash
python setup.py install
```

3. Installing masakari-dashboard

1) Download masakari-dashboard

```bash
git clone https://github.com/openstack/masakari-dashboard
```

2) Install masakari-dashboard

```bash
cd masakari-dashboard && pip install -r requirements.txt
python setup.py install
```

3) Copy the dashboard module files (run from the Horizon source directory; the destination paths are relative to it)

```bash
cp ../masakari-dashboard/masakaridashboard/local/enabled/_50_masakaridashboard.py openstack_dashboard/local/enabled
cp ../masakari-dashboard/masakaridashboard/local/local_settings.d/_50_masakari.py openstack_dashboard/local/local_settings.d
cp ../masakari-dashboard/masakaridashboard/conf/masakari_policy.json /etc/openstack-dashboard
```

4) Restart the httpd service

```bash
systemctl restart httpd
```

VI. Installing Masakari on the Compute Nodes

1. Installing pacemaker-remote

1) Install pacemaker-remote

```bash
yum install pacemaker-remote resource-agents fence-agents-all pcsd
```

2) Copy the pacemaker authkey

Copy /etc/pacemaker/authkey from a controller node to /etc/pacemaker/authkey on each compute node.

3) Start the pacemaker-remote services

```bash
systemctl start pcsd
systemctl enable pcsd
```

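4) Clone masakari-monitors:

```bash
git clone -b stable/stein https://github.com/openstack/masakari-monitors.git
```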

5) Create the user and directories

```bash
useradd -r -d /var/lib/masakarimonitors -c "Masakari instance-ha" -m -s /sbin/nologin masakarimonitors
mkdir -pv /etc/masakarimonitors
mkdir -pv /var/log/masakarimonitors
chown masakarimonitors:masakarimonitors -R /etc/masakarimonitors
chown masakarimonitors:masakarimonitors -R /var/log/masakarimonitors
```

6) Run setup.py from the masakari-monitors source directory:

```bash
sudo python setup.py install
```

7) Generate the configuration files

```bash
tox -e genconfig
```

8) To run masakari-processmonitor, masakari-hostmonitor and masakari-instancemonitor, simply use the following binaries:

- masakari-processmonitor
- masakari-hostmonitor
- masakari-instancemonitor

9) Create the systemd unit files

This guide runs the services as root; in production, running them as the masakari user is recommended.

```ini
# cat /usr/lib/systemd/system/masakari-hostmonitor.service
[Unit]
Description=Masakari Hostmonitor

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/bin/masakari-hostmonitor

[Install]
WantedBy=multi-user.target
```

```ini
# cat /usr/lib/systemd/system/masakari-instancemonitor.service
[Unit]
Description=Masakari Instancemonitor

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/bin/masakari-instancemonitor

[Install]
WantedBy=multi-user.target
```

```ini
# cat /usr/lib/systemd/system/masakari-processmonitor.service
[Unit]
Description=Masakari Processmonitor

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/bin/masakari-processmonitor

[Install]
WantedBy=multi-user.target
```

10) masakari-monitors configuration files

(1) cat /etc/masakarimonitors/masakarimonitors.conf

```ini
[DEFAULT]
host = compute205
log_dir = /var/log/masakarimonitors

[api]
region = RegionOne
api_version = v1
api_interface = public
www_authenticate_uri = https://10.0.5.210:5000
auth_url = https://10.0.5.210:35357
auth_uri = https://10.0.5.210:5000
auth_type = password
domain_name=Default
project_domain_id = default
user_domain_id = default
project_name = service
username = masakari
password = tyun123
project_domain_name = Default
user_domain_name = Default

[host]
monitoring_driver = default
monitoring_interval = 120
disable_ipmi_check = False
restrict_to_remotes = True
corosync_multicast_interfaces = eth0
corosync_multicast_ports = 5405

[process]
process_list_path = /etc/masakarimonitors/process_list.yaml
```

(2) cat /etc/masakarimonitors/hostmonitor.conf

```bash
MONITOR_INTERVAL=120
NOTICE_TIMEOUT=30
NOTICE_RETRY_COUNT=3
NOTICE_RETRY_INTERVAL=3
STONITH_WAIT=30
STONITH_TYPE=ipmi
MAX_CHILD_PROCESS=3
TCPDUMP_TIMEOUT=10
IPMI_TIMEOUT=5
IPMI_RETRY_MAX=3
IPMI_RETRY_INTERVAL=30
HA_CONF="/etc/corosync/corosync.conf"
LOG_LEVEL="debug"
DOMAIN="Default"
ADMIN_USER="masakari"
ADMIN_PASS="tyun123"
PROJECT="service"
REGION="RegionOne"
AUTH_URL="https://10.0.5.210:5000/"
IGNORE_RESOURCE_GROUP_NAME_PATTERN="stonith"
```
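hostmonitor.conf and processmonitor.conf are shell-style `KEY=VALUE` files rather than INI files. A minimal sketch for loading them into a dict (for example, to compare values across compute nodes) follows; it assumes one assignment per line as in the files shown here, and the helper name is hypothetical.

```python
# Minimal sketch: parse a shell-style KEY=VALUE file such as hostmonitor.conf.
# Assumes one assignment per line; shlex removes the surrounding quotes.
import shlex

SAMPLE = '''MONITOR_INTERVAL=120
STONITH_TYPE=ipmi
HA_CONF="/etc/corosync/corosync.conf"
LOG_LEVEL="debug"
'''


def parse_shell_conf(text):
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, _, value = line.partition("=")
        parts = shlex.split(value)  # '"debug"' -> ['debug']
        settings[key] = parts[0] if parts else ""
    return settings


if __name__ == "__main__":
    conf = parse_shell_conf(SAMPLE)
    print(int(conf["MONITOR_INTERVAL"]), conf["HA_CONF"])
```

To check a node's real file, pass the result of `open("/etc/masakarimonitors/hostmonitor.conf").read()` instead of the sample.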

(3) cat /etc/masakarimonitors/proc.list

```
01,/usr/sbin/libvirtd,sudo service libvirtd start,sudo service libvirtd start,,,,
02,/usr/bin/python /usr/bin/masakari-instancemonitor,sudo service masakari-instancemonitor start,sudo service masakari-instancemonitor start,,,,
```

(4) cat /etc/masakarimonitors/processmonitor.conf

```bash
PROCESS_CHECK_INTERVAL=5
PROCESS_REBOOT_RETRY=3
REBOOT_INTERVAL=5
MASAKARI_API_SEND_TIMEOUT=10
MASAKARI_API_SEND_RETRY=12
MASAKARI_API_SEND_DELAY=10
LOG_LEVEL="debug"
DOMAIN="Default"
PROJECT="service"
ADMIN_USER="masakari"
ADMIN_PASS="tyun123"
AUTH_URL="https://10.0.5.210:5000/"
REGION="RegionOne"
```

(5) cat /etc/masakarimonitors/process_list.yaml

```yaml
-
    # libvirt-bin
    process_name: /usr/sbin/libvirtd
    start_command: systemctl start libvirtd
    pre_start_command:
    post_start_command:
    restart_command: systemctl restart libvirtd
    pre_restart_command:
    post_restart_command:
    run_as_root: True
-
    # nova-compute
    process_name: /usr/bin/nova-compute
    start_command: systemctl start openstack-nova-compute
    pre_start_command:
    post_start_command:
    restart_command: systemctl restart openstack-nova-compute
    pre_restart_command:
    post_restart_command:
    run_as_root: True
-
    # instancemonitor
    process_name: /usr/bin/python /usr/bin/masakari-instancemonitor
    start_command: systemctl start masakari-instancemonitor
    pre_start_command:
    post_start_command:
    restart_command: systemctl restart masakari-instancemonitor
    pre_restart_command:
    post_restart_command:
    run_as_root: True
-
    # hostmonitor
    process_name: /usr/bin/python /usr/bin/masakari-hostmonitor
    start_command: systemctl start masakari-hostmonitor
    pre_start_command:
    post_start_command:
    restart_command: systemctl restart masakari-hostmonitor
    pre_restart_command:
    post_restart_command:
    run_as_root: True
-
    # sshd
    process_name: /usr/sbin/sshd
    start_command: systemctl start sshd
    pre_start_command:
    post_start_command:
    restart_command: systemctl restart sshd
    pre_restart_command:
    post_restart_command:
    run_as_root: True
```
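The idea behind masakari-processmonitor can be illustrated by comparing each `process_name` in process_list.yaml with the command lines currently running and picking the `restart_command` for anything missing. This is a simplified sketch, not the monitor's implementation: the entries are written inline in the shape `yaml.safe_load` would return, the running command lines are passed in rather than read from the system, and substring matching is an assumption.

```python
# Simplified sketch (not masakari-processmonitor's code): find entries from
# process_list.yaml that are not running and the command that would restart them.
PROCESS_LIST = [
    {"process_name": "/usr/sbin/libvirtd",
     "restart_command": "systemctl restart libvirtd", "run_as_root": True},
    {"process_name": "/usr/bin/nova-compute",
     "restart_command": "systemctl restart openstack-nova-compute",
     "run_as_root": True},
    {"process_name": "/usr/sbin/sshd",
     "restart_command": "systemctl restart sshd", "run_as_root": True},
]


def missing_processes(process_list, running_cmdlines):
    """Return (process_name, restart_command) for every entry not running."""
    result = []
    for entry in process_list:
        name = entry["process_name"]
        # assumed rule: running if process_name appears in some command line
        if not any(name in cmd for cmd in running_cmdlines):
            result.append((name, entry["restart_command"]))
    return result


if __name__ == "__main__":
    running = ["/usr/sbin/libvirtd --listen", "/usr/sbin/sshd -D"]
    for name, cmd in missing_processes(PROCESS_LIST, running):
        print(f"{name} is down; would run: {cmd}")
```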

11) Start the masakari-monitors services

```bash
systemctl start masakari-hostmonitor.service masakari-instancemonitor.service masakari-processmonitor.service
systemctl enable masakari-hostmonitor.service masakari-instancemonitor.service masakari-processmonitor.service
```

VII. Pacemaker Configuration

The steps are as follows:

1) Install the following packages on the three controller nodes

```bash
yum install -y lvm2 cifs-utils quota psmisc
yum install -y pcs pacemaker corosync fence-agents-all resource-agents crmsh
```

2) Install the following packages on the compute nodes

```bash
yum install -y pacemaker-remote pcsd fence-agents-all resource-agents
```

3) Enable pcsd at boot and start it on all nodes

```bash
systemctl enable pcsd.service
systemctl start pcsd.service
```

4) Set the hacluster user's password on the three nodes, then authenticate all nodes

```bash
passwd hacluster
# New password:          (set the password to yjscloud)
# Retype new password:
pcs cluster auth controller201 controller202 controller203 compute205 compute206 compute207
```

5) Create and start a cluster named openstack whose members are controller201, controller202 and controller203

```bash
pcs cluster setup --start --name openstack controller201 controller202 controller203
```

6) Enable the cluster to start at boot

pcs cluster enable --all

7) Check the cluster status

```bash
pcs cluster status
ps aux | grep pacemaker
```

8) Verify the Corosync installation and the current Corosync status:

```bash
corosync-cfgtool -s
corosync-cmapctl | grep members
pcs status corosync
```

9) Check that the configuration is valid (no output means it is correct):

crm_verify -L -V

10) If errors are reported, STONITH can be disabled temporarily:

pcs property set stonith-enabled=false

11) Ignore loss of quorum:

pcs property set no-quorum-policy=ignore
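A short calculation shows what `no-quorum-policy=ignore` gives up. With one vote per full cluster node, quorum is a strict majority of votes; pacemaker_remote nodes such as compute205-207 do not run corosync and have no vote, so only the three controllers count. With 3 votes, quorum is 2 and one controller can fail. When quorum is ignored, a partitioned node keeps running resources without a majority, which is why working STONITH matters.

```python
# Worked example: strict-majority quorum with one vote per full cluster node.
def quorum(votes):
    """Smallest number of votes that is a strict majority."""
    return votes // 2 + 1


def tolerated_failures(votes):
    """How many voting nodes can fail while the rest stays quorate."""
    return votes - quorum(votes)


if __name__ == "__main__":
    for n in (2, 3, 5):
        print(f"{n} voters: quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```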

12) Add the pacemaker-remote nodes

```bash
pcs cluster node add-remote compute205 compute205
pcs cluster node add-remote compute206 compute206
pcs cluster node add-remote compute207 compute207
```

13) If OpenStack uses Pacemaker for high availability, it can be configured as follows:

```bash
# Label the nodes with an attribute that distinguishes compute nodes from controller nodes
pcs property set --node controller201 node_role=controller
pcs property set --node controller202 node_role=controller
pcs property set --node controller203 node_role=controller
pcs property set --node compute205 node_role=compute
pcs property set --node compute206 node_role=compute
pcs property set --node compute207 node_role=compute
# Create the VIP resource
pcs resource create vip ocf:heartbeat:IPaddr2 ip=10.0.5.210 cidr_netmask=24 nic=eth0 op monitor interval=30s
# Bind the VIP to the controller nodes
pcs constraint location vip rule resource-discovery=exclusive score=0 node_role eq controller --force
# Create the haproxy resource
pcs resource create lb-haproxy systemd:haproxy --clone
# Bind haproxy to the controller nodes
pcs constraint location lb-haproxy-clone rule resource-discovery=exclusive score=0 node_role eq controller --force
# Keep the resources on the same node and set the start order
pcs constraint colocation add lb-haproxy-clone with vip
pcs constraint order start vip then lb-haproxy-clone kind=Optional
```

14) Configure IPMI-based STONITH

Here the STONITH action is set to off (power off) instead of the default, reboot.

```bash
pcs property set stonith-enabled=true
pcs stonith create ipmi-fence-compute205 fence_ipmilan pcmk_host_list='compute205' pcmk_host_check='static-list' pcmk_off_action=off pcmk_reboot_action=off ipaddr='10.0.5.18' ipport=15205 login='admin' passwd='password' lanplus=true power_wait=4 op monitor interval=60s
pcs stonith create ipmi-fence-compute206 fence_ipmilan pcmk_host_list='compute206' pcmk_host_check='static-list' pcmk_off_action=off pcmk_reboot_action=off ipaddr='10.0.5.18' ipport=15206 login='admin' passwd='password' lanplus=true power_wait=4 op monitor interval=60s
pcs stonith create ipmi-fence-compute207 fence_ipmilan pcmk_host_list='compute207' pcmk_host_check='static-list' pcmk_off_action=off pcmk_reboot_action=off ipaddr='10.0.5.18' ipport=15207 login='admin' passwd='password' lanplus=true power_wait=4 op monitor interval=60s
```
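The three STONITH commands differ only in the host name and IPMI port, so they can be generated instead of copied by hand. This hypothetical helper assumes the layout of this example environment (one IPMI address, 10.0.5.18, with port 15000 plus the node number) and the placeholder credentials shown above; it only prints the commands.

```python
# Hypothetical helper: generate the "pcs stonith create" commands above.
# Assumes one IPMI address and port = 15000 + node number, as in this example.
import shlex

IPMI_ADDR = "10.0.5.18"


def stonith_command(host, ipport, login="admin", passwd="password"):
    args = [
        "pcs", "stonith", "create", f"ipmi-fence-{host}", "fence_ipmilan",
        f"pcmk_host_list={host}", "pcmk_host_check=static-list",
        "pcmk_off_action=off", "pcmk_reboot_action=off",  # power off, not reboot
        f"ipaddr={IPMI_ADDR}", f"ipport={ipport}",
        f"login={login}", f"passwd={passwd}",
        "lanplus=true", "power_wait=4",
        "op", "monitor", "interval=60s",
    ]
    return shlex.join(args)  # quotes any argument that needs it


if __name__ == "__main__":
    for node in (205, 206, 207):
        print(stonith_command(f"compute{node}", 15000 + node))
```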

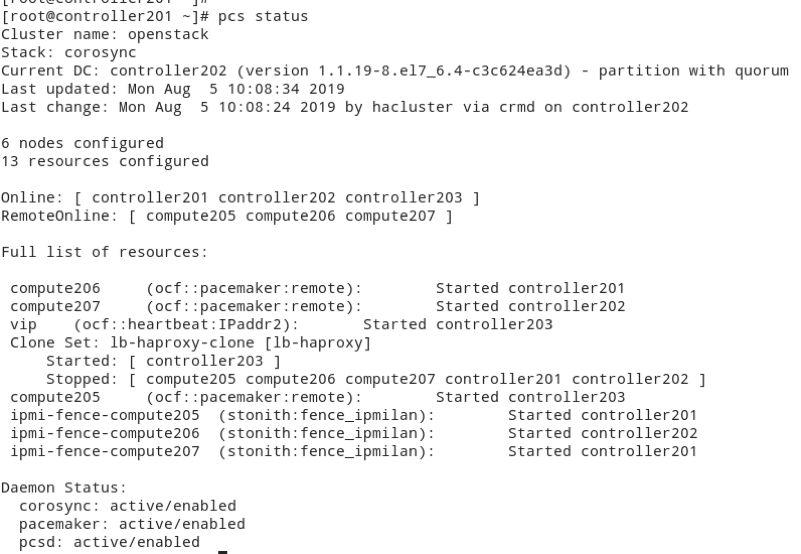

15) Check the pcs status

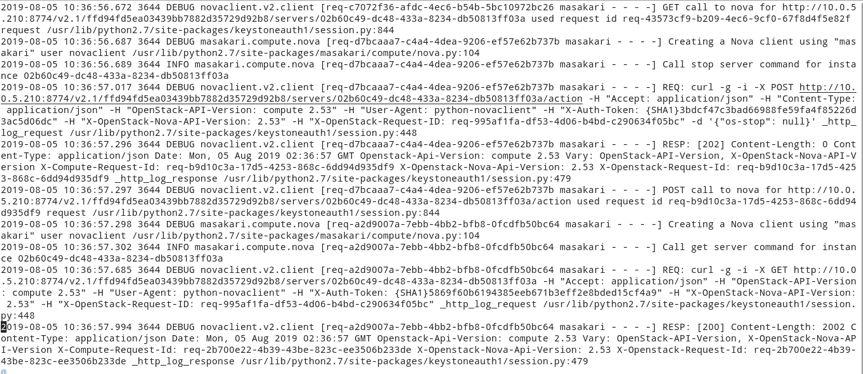

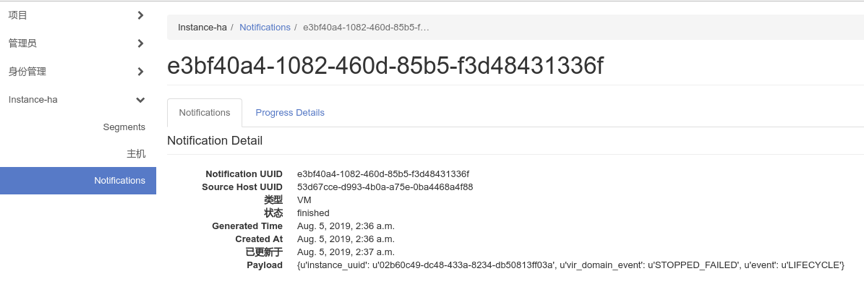

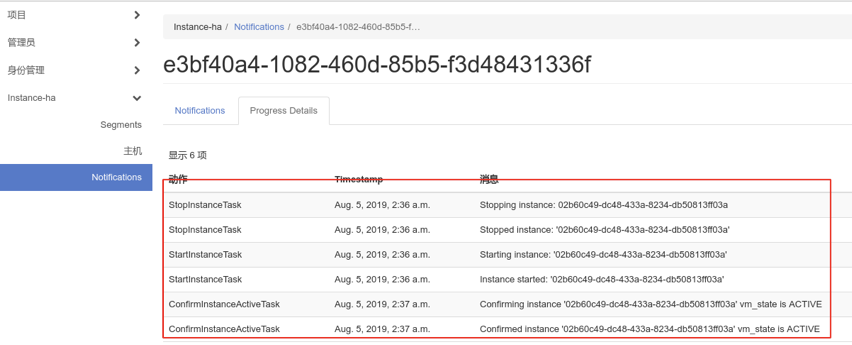

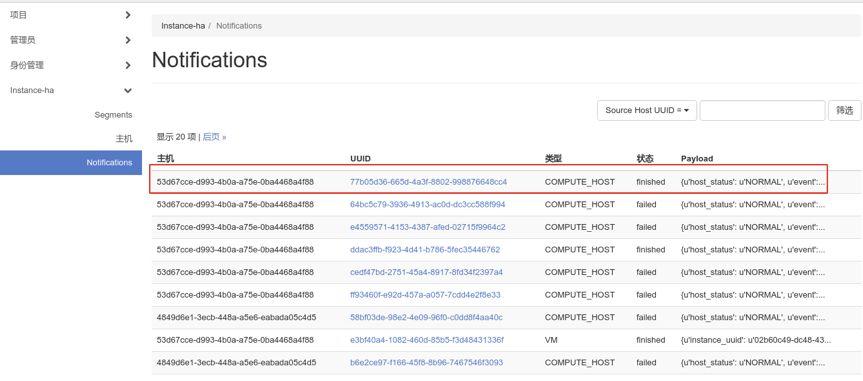

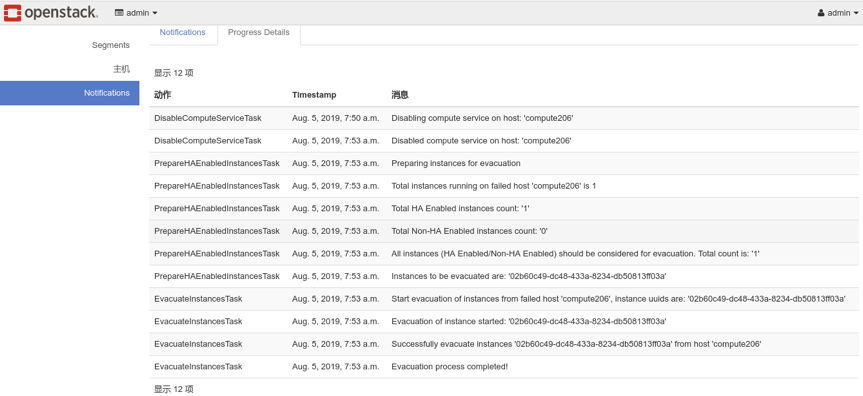

VIII. Masakari Configuration and Testing

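A failover segment uses one of the following recovery methods:

- auto: Nova selects a new compute host to evacuate the instances running on the failed compute host.
- reserved_host: one of the reserved hosts configured in the segment is used to evacuate the instances running on the failed compute host.
- auto_priority: first tries the auto recovery method; if that fails, it tries the reserved_host recovery method.
- rh_priority: the exact opposite of auto_priority (reserved_host first, then auto).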
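The fallback order of the four recovery methods can be written down as a small table. This sketch only models the order in which the two basic strategies are tried; `try_strategy` is a caller-supplied stand-in for the actual evacuation attempt and does not talk to Nova.

```python
# Minimal sketch: the order in which each recovery method tries strategies.
RECOVERY_ORDER = {
    "auto": ["auto"],
    "reserved_host": ["reserved_host"],
    "auto_priority": ["auto", "reserved_host"],
    "rh_priority": ["reserved_host", "auto"],
}


def recover(method, try_strategy):
    """Try strategies in order; return the first that succeeds, else None.

    try_strategy is a caller-supplied function returning True on success.
    """
    for strategy in RECOVERY_ORDER[method]:
        if try_strategy(strategy):
            return strategy
    return None


if __name__ == "__main__":
    # Pretend Nova finds no target host, but a reserved host is available.
    works = {"auto": False, "reserved_host": True}
    print(recover("auto_priority", works.get))  # prints reserved_host
    print(recover("auto", works.get))           # prints None
```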

Note: Masakari does not currently use the service type, but it is a required field, so its default value is set to 'compute' and cannot be changed.

Create a failover segment:

```
$ openstack segment create segment1 auto COMPUTE
+-----------------+--------------------------------------+
| Field           | Value                                |
+-----------------+--------------------------------------+
| created_at      | 2019-07-24T01:45:04.000000           |
| updated_at      | None                                 |
| uuid            | 0f57ae64-88cc-4193-9aec-1c54c59b2bbd |
| name            | segment1                             |
| description     | None                                 |
| id              | 1                                    |
| service_type    | COMPUTE                              |
| recovery_method | auto                                 |
+-----------------+--------------------------------------+
```

Add a compute host to the segment:

```
$ openstack segment host create compute205 COMPUTE SSH 691b8ef3-7481-48b2-afb6-908a98c8a768
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| created_at          | 2019-07-25T12:18:58.000000           |
| updated_at          | None                                 |
| uuid                | e1fd288c-a545-456e-81d5-64927b8cea04 |
| name                | compute205                           |
| type                | COMPUTE                              |
| control_attributes  | SSH                                  |
| reserved            | False                                |
| on_maintenance      | False                                |
| failover_segment_id | 0f57ae64-88cc-4193-9aec-1c54c59b2bbd |
+---------------------+--------------------------------------+
```
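The two CLI calls above send JSON requests to masakari-api. The sketch below only builds request bodies carrying the same values; the layout (a top-level "segment" or "host" object with these field names) is an assumption based on the Masakari API reference, the helper names are hypothetical, and nothing is sent over the network.

```python
# Sketch: build request bodies equivalent to the two CLI calls above.
# Assumption: body layout follows the Masakari API reference.
import json

RECOVERY_METHODS = {"auto", "reserved_host", "auto_priority", "rh_priority"}


def segment_body(name, recovery_method, service_type="COMPUTE", description=None):
    if recovery_method not in RECOVERY_METHODS:
        raise ValueError(f"unknown recovery method: {recovery_method}")
    body = {"name": name, "recovery_method": recovery_method,
            "service_type": service_type}
    if description is not None:
        body["description"] = description
    return {"segment": body}


def host_body(name, host_type="COMPUTE", control_attributes="SSH", reserved=False):
    return {"host": {"name": name, "type": host_type,
                     "control_attributes": control_attributes,
                     "reserved": reserved}}


if __name__ == "__main__":
    print(json.dumps(segment_body("segment1", "auto"), indent=2))
    print(json.dumps(host_body("compute205"), indent=2))
```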


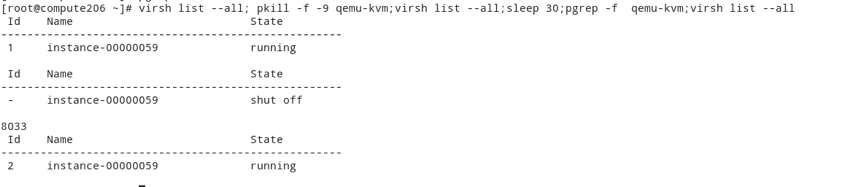

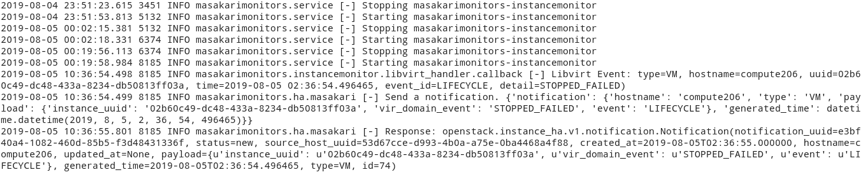

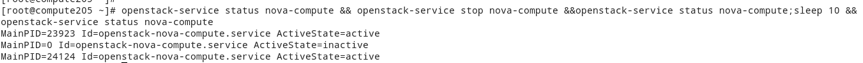

Before the failure, VM-1 runs on compute206 and its BMC reports the power status as ON:

```
[root@controller201 ~(keystone_admin)]# openstack server show VM-1 -c OS-EXT-SRV-ATTR:host -f value
compute206
[root@controller201 ~(keystone_admin)]# fence_ipmilan -P -A password -a 10.0.5.18 -p password -l admin -u 15206 -o status
Status: ON
[root@compute206 ~]# virsh list --all
 Id    Name                           State
----------------------------------------------------
 1     instance-00000059              running
```

After compute206 fails (its BMC now reports OFF), VM-1 has been evacuated to compute207, where instance-00000059 is running:

```
[root@controller201 ~(keystone_admin)]# openstack server show VM-1 -c OS-EXT-SRV-ATTR:host -f value
compute207
[root@controller201 ~(keystone_admin)]# fence_ipmilan -P -A password -a 10.0.5.18 -p password -l admin -u 15206 -o status
Status: OFF
[root@compute207 ~]# virsh list --all
 Id    Name                           State
----------------------------------------------------
 1     instance-0000005c              running
 2     instance-0000005f              running
 3     instance-00000059              running
```

IX. Customized Recovery Workflow

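A custom task is a TaskFlow task, for example:

```python
from oslo_log import log as logging
from taskflow import task

LOG = logging.getLogger(__name__)


class Noop(task.Task):
    """A no-op custom task that only logs a message when it runs."""

    def __init__(self, novaclient):
        # The task's constructor receives a Nova client object
        self.novaclient = novaclient
        super(Noop, self).__init__()

    def execute(self, **kwargs):
        # Called by TaskFlow when the flow reaches this task
        LOG.info("Custom task executed successfully..!!")
        return
```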

Register the custom tasks as entry points in the third-party library's setup.cfg:

```ini
masakari.task_flow.tasks =
    custom_pre_task = <custom_task_class_path_from_third_party_library>
    custom_main_task = <custom_task_class_path_from_third_party_library>
    custom_post_task = <custom_task_class_path_from_third_party_library>
```

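Note: the entry points in the third-party library's setup.cfg must use the same key (masakari.task_flow.tasks) as Masakari's own setup.cfg for the corresponding failure recovery.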

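Then add the custom tasks to the pre, main and post phases of the recovery flow in masakari.conf (in the [taskflow_driver_recovery_flows] section):

```ini
host_auto_failure_recovery_tasks = {'pre':['disable_compute_service_task','custom_pre_task'],'main':['custom_main_task','prepare_HA_enabled_instances_task'],'post':['evacuate_instances_task','custom_post_task']}
```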
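To see the order in which the configured tasks end up running, the option value can be parsed and flattened phase by phase. This sketch assumes each phase runs its tasks sequentially in list order (pre, then main, then post); it is an illustration, not Masakari's flow-building code.

```python
# Sketch: show the resulting task order for the option value above.
# Assumes pre -> main -> post, each phase running its list in order.
import ast

CONF_VALUE = ("{'pre':['disable_compute_service_task','custom_pre_task'],"
              "'main':['custom_main_task','prepare_HA_enabled_instances_task'],"
              "'post':['evacuate_instances_task','custom_post_task']}")


def execution_order(tasks):
    """Return task names in pre -> main -> post order."""
    order = []
    for phase in ("pre", "main", "post"):
        order.extend(tasks.get(phase, []))
    return order


if __name__ == "__main__":
    tasks = ast.literal_eval(CONF_VALUE)  # safe parsing of the dict literal
    for i, name in enumerate(execution_order(tasks), 1):
        print(i, name)
```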

X. Miscellaneous

Other [host_failure] and [instance_failure] settings in masakari.conf:

```ini
[host_failure]
evacuate_all_instances=False
ignore_instances_in_error_state=False
add_reserved_host_to_aggregate=False

[instance_failure]
process_all_instances=True
```
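How the two instance-selection options interact can be shown with a simplified model. It is an assumption based on the option names and Masakari's documented use of the `HA_Enabled` instance metadata key, not Masakari's code: instances in ERROR are skipped only when `ignore_instances_in_error_state` is set, HA-enabled instances are always selected first, and the remaining instances are added only when `evacuate_all_instances` is True.

```python
# Simplified model (an assumption, not Masakari's code) of which instances
# on a failed host are selected for evacuation.
def instances_to_evacuate(instances, evacuate_all_instances=False,
                          ignore_instances_in_error_state=False):
    ha_enabled, others = [], []
    for inst in instances:
        if ignore_instances_in_error_state and inst["vm_state"] == "error":
            continue  # skip instances already in ERROR
        if str(inst.get("metadata", {}).get("HA_Enabled", "")).lower() == "true":
            ha_enabled.append(inst["name"])
        else:
            others.append(inst["name"])
    # HA-enabled instances first; the rest only with evacuate_all_instances
    return ha_enabled + (others if evacuate_all_instances else [])


if __name__ == "__main__":
    vms = [
        {"name": "VM-1", "vm_state": "active", "metadata": {"HA_Enabled": "True"}},
        {"name": "VM-2", "vm_state": "active", "metadata": {}},
    ]
    print(instances_to_evacuate(vms))
    print(instances_to_evacuate(vms, evacuate_all_instances=True))
```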